But Why?

As a procedure, sp_WhoIsActive is pretty perfect. It’s not all things to all people, but it does what it’s supposed to do really well.

One thing I really like about it is that you can change a couple things about the way it returns data to you (or to a logging table) in different ways:

- The set of columns

- The sorting of columns

There are definitely good uses for these options, especially when you’re beyond the “what the hell is going on here?” phase and on to the “troubleshooting a specific problem” phase.

Just as a couple examples, if you were specifically troubleshooting:

- tempdb issues, you’d wanna sort and focus on tempdb related columns

- blocking issues, you’d wanna sort and focus on blocking related columns

Let’s take a look at how to do that.

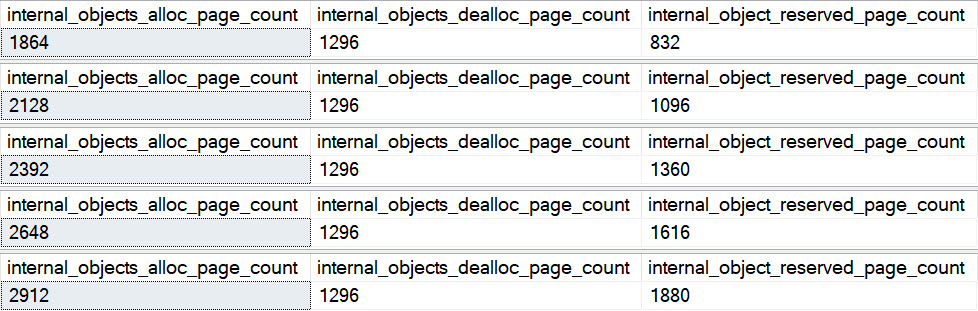

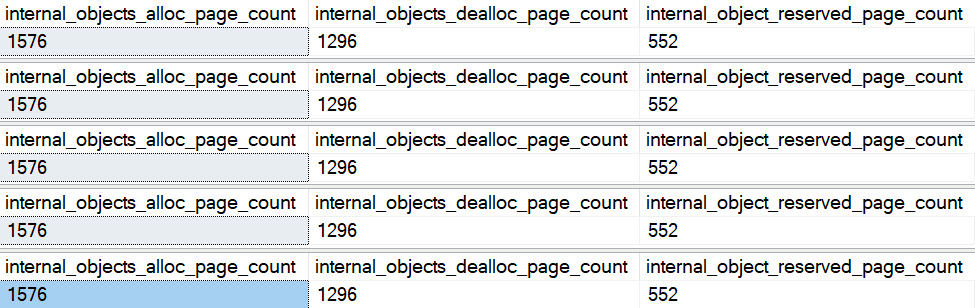

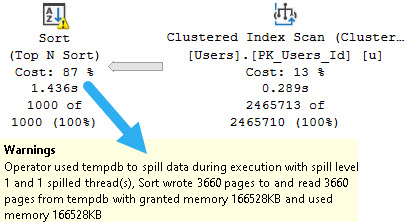

Focus On tempdb

If you want to focus on just what’s using a lot of tempdb without any other noise, and you want to find out what’s using the most tempdb right at the top, you can do this:

EXEC sp_WhoIsActive

@sort_order = '[tempdb_current] DESC',

@get_plans = 1,

@output_column_list = '[start_time][session_id][sql_text][query_plan][wait_info][temp%]';

It will:

- Sort the results by what currently has the most stuff in tempdb

- Only output columns related to tempdb use with some other identifying details

What’s nice about this is that not only does it help you totally focus right in on what you care about.

And if you’re taking screenshots to document what hellish things are happening, you don’t have to spend a bunch of time editing them to cut distractions out.

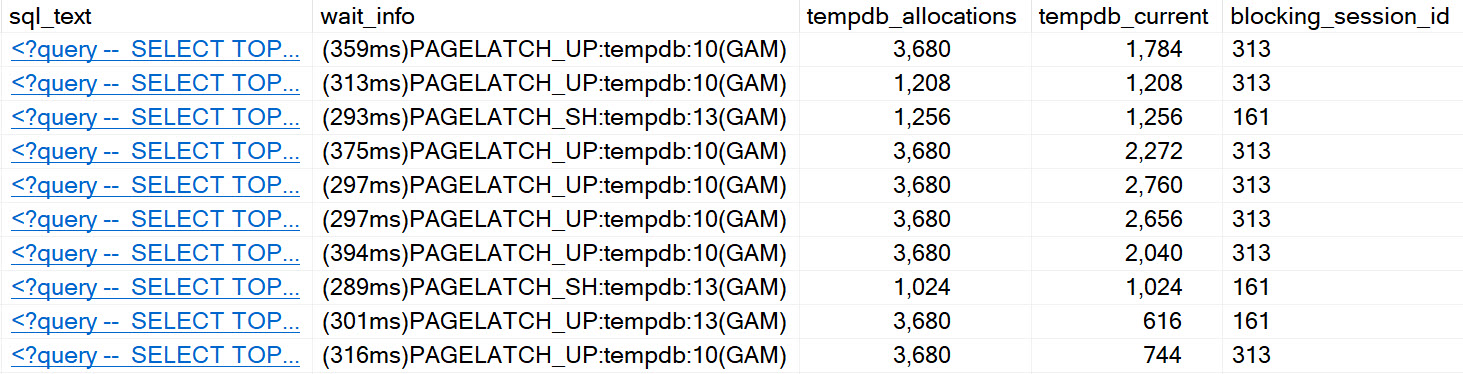

Focus On Blocking

This is one I use a whole bunch when clients are hitting blocking issues.

EXEC sp_WhoIsActive

@sort_order = '[blocked_session_count] DESC',

@find_block_leaders = 1,

@get_plans = 1,

@get_task_info = 2,

@get_additional_info = 1,

@output_column_list = '[start_time][session_id][sql_text][query_plan][wait_info][block%][additional_info]';

Here’s what it does:

- Finds which queries have the most blocked sessions under them

- Sort the results by which sessions are doing the most blocking

- Gets additional information about the session, like isolation level, locks, etc.

- Only outputs columns you need to see relevant blocking details

If you don’t have an extended event session set up to capture blocking and stuff like that, this is a great way to get a lot of the same information on the fly.

What You Do

There are probably other variations on this that would be useful to you at various times. These are just to get you started.

You can sort by any one of these columns:

session_id, physical_io, reads, physical_reads, writes, tempdb_allocations,

tempdb_current, CPU, context_switches, used_memory, physical_io_delta, reads_delta,

physical_reads_delta, writes_delta, tempdb_allocations_delta, tempdb_current_delta,

CPU_delta, context_switches_delta, used_memory_delta, tasks, tran_start_time,

open_tran_count, blocking_session_id, blocked_session_count, percent_complete,

host_name, login_name, database_name, start_time, login_time, program_name

Meaning, if you were troubleshooting high CPU, it might make sense to sort by that descending or something.

Thanks for reading!

Going Further

If this is the kind of SQL Server stuff you love learning about, you’ll love my training. I’m offering a 75% discount to my blog readers if you click from here. I’m also available for consulting if you just don’t have time for that, and need to solve database performance problems quickly. You can also get a quick, low cost health check with no phone time required.