Scrabble Words

You spend most of your life waiting. Sometimes it’s not bad. Even a long wait can be okay if you only have to do it once.

Once I waited an hour for a lobster roll. Once.

But let’s face it — it’s a lot different waiting for something you really want. When you’re waiting for a query to finish, it’s probably just to do your job.

That probably isn’t fun, like waiting all year to get a glass of Port Ellen in Seattle only to have PASS get moved to Houston and then have a pandemic break out.

Or something.

Semaphored Kind Of Life

Memory is precious, that’s why VM admins give you so little of it. SQL Server also thinks memory is precious, and will only give certain things so much of it. For instance, it’ll only give Windows a tiny bit, ha ha ha.

Just kidding. Sort of. Set Max Server Memory responsibly, though. Especially if you lock pages in memory.

SQL Server will dedicate memory to two main areas:

- Caching data in the buffer pool

- Giving to queries temporarily to use as scratch space

I posted earlier in the series about what queries use memory for, but to recap:

- Building hash tables

- Sorting data

- Optimized nested loops

- Column store inserts

The most common uses will be hashing and sorting, but you should be aware of the others.

RAM, Limited

It’s “fairly” easy to figure out how big your buffer pool needs to be to deal with your most commonly used data. The real wild card becomes figuring out queries and memory grants.

- SQL Server might make a bad guess for a memory grant

- It might happen because you’ve picked unforgivably large sizes for string columns

- It could make a good guess for one parameter that’s bad for another parameter

- Or it might just be a bad guess all around, even if you picked good sizes for string columns

And any of this could happen at any time, because databases are completely insane places.

Will There Be Enough Room?

When memory grants go wild, either because a small number start getting huge grants, or because a swarm of queries all get small to medium sized grants, they start stealing space from the buffer pool.

Sure, this sucks because as data gets pushed out, you might have to re-read it from disk. If your disks aren’t awful, you might not notice too much strain. Things’ll be a little less snappy, but it might not be the end of the world.

It’s not like you “run out” of buffer pool space, it just turns into a weird gas-station-sushi montage of things going out as fast as they go in. You absolutely can run out of memory grant space, though. And that, my friends, is when the real waiting can happen.

The wait that shows up when you run out of memory to give to queries is called RESOURCE_SEMAPHORE. I demo that happening in a video here. At that link, you’ll also find an easy to run script to help you figure out which queries might be causing those problems.

Rock n’ Roll, Deal With It

There are some interesting ways to fight inflated memory grants. You do need to strike a balance between memory grant sizes and potential spill sizes, but that’s well beyond a blog post. That also assumes that memory grants can’t be properly addressed via other means.

You may hit some of this stuff for reasons that will sound eerily familiar to other potential query fixes.

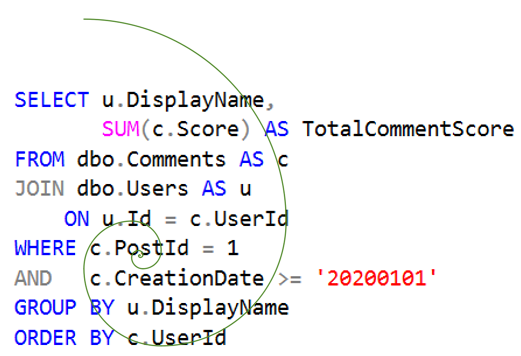

- Are you writing crappy queries that make the optimizer make bad guesses?

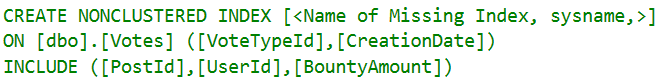

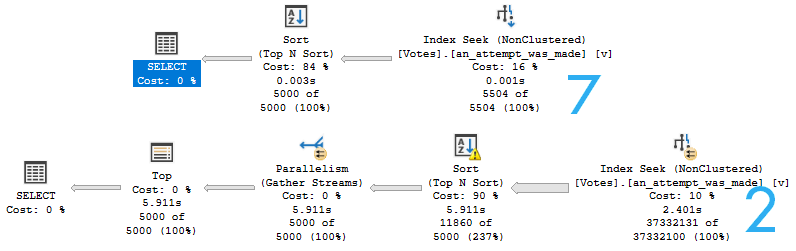

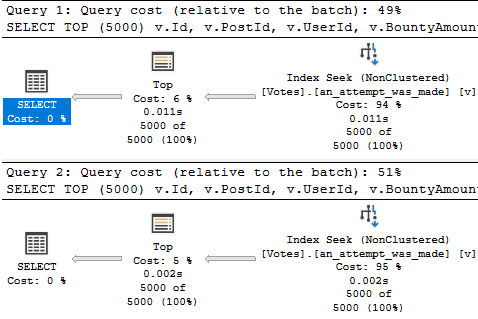

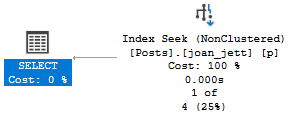

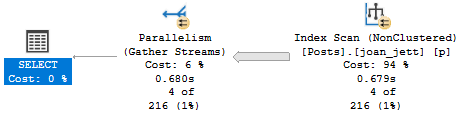

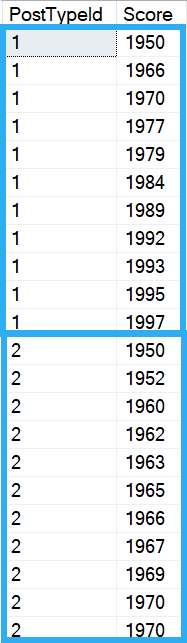

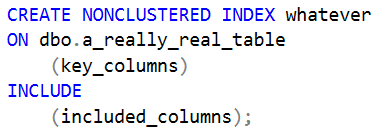

- Do you need to add an index to fix a sort?

- Does adding a better index get you away from a hash join or aggregate?

- Does separating data into relational and informational pieces help?

After that, you’re looking at more heavy-handed fixes, like Resource Governor, or query hints.

If you have a good alternative plan, you may be able to force it with a plan guide, or using Query Store. But that assumes you have a good alternative plan that’s good for a wide range of parameters. And that’s a big assumption.

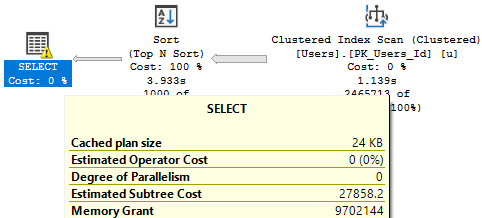

What’s a good place to find information about memory grants? Well, we got those there query plan things, and maybe we should start poking around those.

Thanks for reading!

Going Further

If this is the kind of SQL Server stuff you love learning about, you’ll love my training. I’m offering a 75% discount to my blog readers if you click from here. I’m also available for consulting if you just don’t have time for that and need to solve performance problems quickly.