Historical

I’ve had to help people with this a few times recently, and it’s always a mess. If you’re lucky, you can use a technique like Andy’s to do it, but even this can be complicated by foreign keys, schemabound objects, etc. If your scenario is one big lonely table though, that can be great.

Michael J. Swart has a post where he talks about some of the things you can run into, and Keeping It Canadian™, Aaron Bertrand has a four part series on the subject, too.

Going fully international, Gianluca Sartori and Paul White have also written about the subject.

Now that we have all that covered, let’s talk about where everything falls short: If the identity column is in the primary key, or any other indexes, you still have to drop those to modify the column even if they all have compression enabled.

El Tiablo

For example, if we have this table:

DROP TABLE IF EXISTS dbo.comp_test;

CREATE TABLE dbo.comp_test

(

id int PRIMARY KEY CLUSTERED

WITH (DATA_COMPRESSION = ROW) ,

crap int,

good date,

bad date,

INDEX c (crap, id)

WITH (DATA_COMPRESSION = ROW),

INDEX g (good, id)

WITH (DATA_COMPRESSION = ROW),

INDEX b (bad, id)

WITH (DATA_COMPRESSION = ROW)

);

And we try to alter the id column:

ALTER TABLE dbo.comp_test

ALTER COLUMN id BIGINT NOT NULL

WITH

(

ONLINE = ON

);

We get all these error messages:

Msg 5074, Level 16, State 1, Line 22

The object 'PK__comp_tes__3213E83FF93312D6' is dependent on column 'id'.

Msg 5074, Level 16, State 1, Line 22

The index 'b' is dependent on column 'id'.

Msg 5074, Level 16, State 1, Line 22

The index 'g' is dependent on column 'id'.

Msg 5074, Level 16, State 1, Line 22

The index 'c' is dependent on column 'id'.

Msg 4922, Level 16, State 9, Line 22

ALTER TABLE ALTER COLUMN id failed because one or more objects access this column.

Odds R Us

The chances of you having an identity column on a table that isn’t the PK seems pretty low to me, based on every single database I’ve ever looked at.

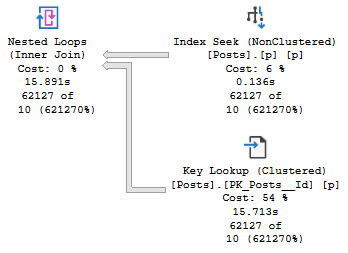

The chances of you being able to drop the Primary Key on a table running over 2 billion rows, alter the column, and then add it back without causing some congestion aren’t so hot. If your database is in an AG or synchronizing data in some other way, you’re in for a bad time with that, too.

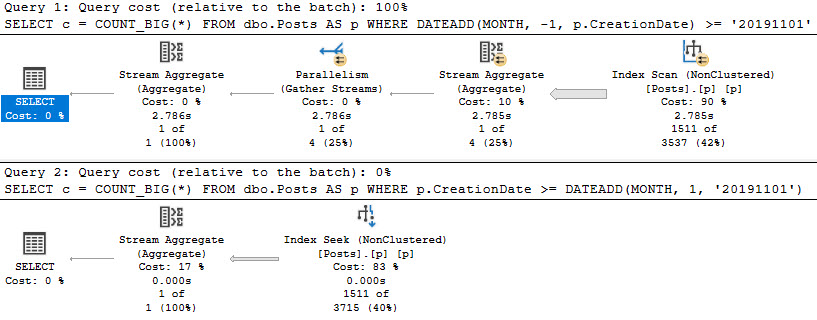

Sure, if you’re on Enterprise Edition, you can drop the Primary Key with ONLINE = ON, but you can’t do that with the nonclustered indexes.

ALTER TABLE dbo.comp_test

DROP CONSTRAINT PK__comp_tes__3213E83FF93312D6

WITH (ONLINE = ON);

That works fine, but, this does not:

DROP INDEX c ON dbo.comp_test WITH (ONLINE = ON);

This error makes our issue clear:

Msg 3745, Level 16, State 1, Line 33

Only a clustered index can be dropped online.

Adding them back with ONLINE = ON is also available in Enterprise Edition, but all the queries that used those indexes are gonna blow chunks because those 2 billion row indexes were probably pretty important to performance.

Partitioning Is Useless

I know, I know. It probably feels like I’m picking on partitioning here. It really wasn’t made for this sort of thing, though.

CREATE PARTITION FUNCTION pf_nope(datetime)

AS RANGE RIGHT

FOR VALUES ('19990101');

CREATE PARTITION SCHEME ps_nope

AS PARTITION pf_nope

ALL TO ([PRIMARY]);

CREATE TABLE dbo.one_switch

(

id integer,

e datetime

) ON ps_nope(e);

CREATE TABLE dbo.two_switch

(

id bigint,

e datetime

) ON ps_nope(e);

In the first table, our id column is an integer, and in the second column is a big integer.

ALTER TABLE dbo.two_switch

SWITCH PARTITION 1

TO dbo.one_switch PARTITION 1;

Leads us to this error message:

Msg 4944, Level 16, State 1, Line 65

ALTER TABLE SWITCH statement failed because column 'id' has data type bigint

in source table 'Crap.dbo.two_switch' which is different from its type int in

target table 'Crap.dbo.one_switch'.

No match, no switch.

What a drag it is getting old.

FIXFIX

Moving from INT to BIGINT is not fun, especially for a change that realistically only needs to apply to new pages.

Changes to old pages (if ever necessary) could be deferred until then, but in the case of columns based on identities or sequences, I can’t think of a realistic scenario where that would even happen.

It would be really nice to have other options for making this change that didn’t seemingly trade complexity for uptime.

Thanks for reading!

Going Further

If this is the kind of SQL Server stuff you love learning about, you’ll love my training. I’m offering a 75% discount to my blog readers if you click from here. I’m also available for consulting if you just don’t have time for that and need to solve performance problems quickly.