Secret Agent Plan

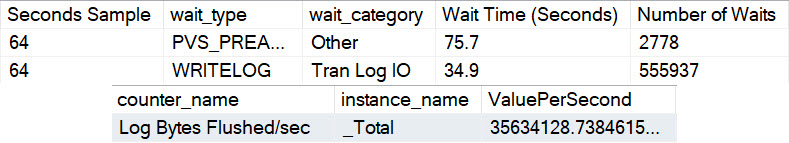

My dislike for implicit transactions is well documented. Recently, while working with a client, I noticed that they had a bunch of them causing blocking.

Digging in a little bit further, I noticed they were all coming from an Agent Job. Of course, by default, Agent runs with a bunch of wacked-out ANSI options.

To get the job to perform better — which it did — it had to make use of a filtered index on an archival task. If you scroll way down in that doc, you’ll see a note:

Review the required SET options for filtered index creation in CREATE INDEX (Transact-SQL) syntax

In order to create, or have queries use your filtered index, they need to have very specific options set correctly.

Baggage

Rather than just setting the required options, which was apparently a lot of typing, someone had just set all the ANSI defaults on.

SET ANSI_DEFAULTS ON;

But this comes with some additional baggage, in the form of implicit transactions. If you run DBCC USEROPTIONS; with that turned on:

Set Option Value ----------------------- -------------- textsize 2147483647 language us_english dateformat mdy datefirst 7 statistics XML SET lock_timeout -1 quoted_identifier SET arithabort SET ansi_null_dflt_on SET ansi_defaults SET ansi_warnings SET ansi_padding SET ansi_nulls SET concat_null_yields_null SET cursor_close_on_commit SET implicit_transactions SET <---- UwU what's this isolation level read committed

It sets all the things you actually need, plus a couple other options for implicit transactions and cursor close on commit.

Baggage

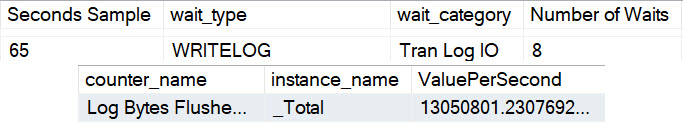

Of course, had someone just done a bit more typing, all would have been well and good.

SET ANSI_NULLS ON; SET ANSI_PADDING ON; SET ANSI_WARNINGS ON; SET ARITHABORT ON; SET CONCAT_NULL_YIELDS_NULL ON; SET QUOTED_IDENTIFIER ON;

Using SET ANSI_DEFAULTS OFF;is equally disappointing, sort of.

Set Option Value ----------------------- -------------- textsize 2147483647 language us_english dateformat mdy datefirst 7 lock_timeout -1 arithabort SET concat_null_yields_null SET isolation level read committed

It really does just flip everything off. Not that I’m saying it shouldn’t — but maybe we need a command in between?

SET ANSI_DEFAULTS BACK_TO_NORMAL; or something.

Whatever “normal” means.

Thanks for reading!

Going Further

If this is the kind of SQL Server stuff you love learning about, you’ll love my training. I’m offering a 75% discount to my blog readers if you click from here. I’m also available for consulting if you just don’t have time for that and need to solve performance problems quickly.