Start To Finish

When I’m working with clients, we look at a lot of stuff together to make sure things are in good working order.

If we’re tuning a single query, the goal is pretty easy to define. We want it to finish as fast as possible.

How you get there is where things may get interesting, but let’s be honest: most of the time you’re going to be fixing the same half a dozen problems in some combination.

Note that the query finishing, and results being finished returning are two different metrics. A query may finish very quickly, but returning results may take considerably longer for various reasons.

You can think of this as responsiveness, and it’s a cornerstone to building something people are happy with. When you’re talking about user experience, performance has to be part of the conversation.

How fast individual queries finish also plays a big part in overall concurrency.

Put Through

Typically this matters more for OLTP workloads. Data warehouses aren’t usually high concurrency environments in that the Batch Requests/Sec counter is sitting around bored. Joe Obbish has a great talk on improving data warehouse loading patterns, because usually isn’t always. Ha ha ha. Barf.

Workloads that do have OLTP characteristics are more sensitive to all sorts of things, and I don’t just mean parameters. Locking and Deadlocking are high up on the list, along with available hardware resources.

Rather obviously, if you want to improve overall throughput, having queries finish as quickly as possible is an attractive goal. You can run way more queries in one second if they take 100ms than if they take 900ms, you know? You know. You’re smart.

Factorials

What are the factors that influence query speed?

Starting in the database:

- Well written queries

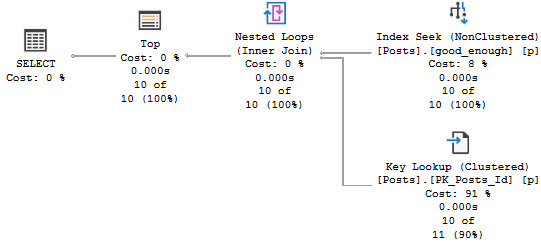

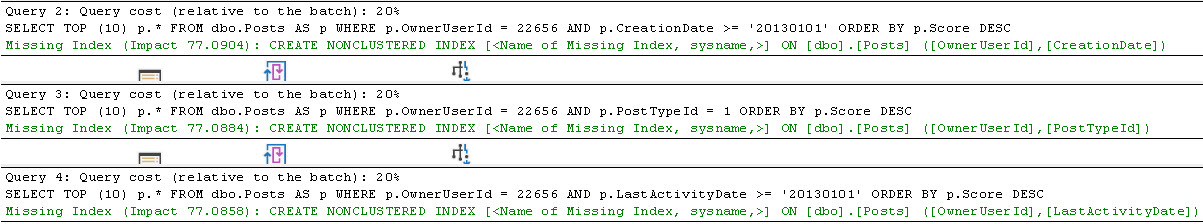

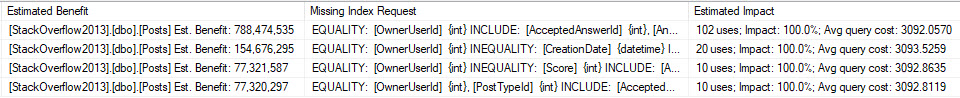

- Thoughtful indexes

- Maintained Statistics

- Correctly stored data types

- Properly normalized tables

Moving out a little bit further, there are some important settings:

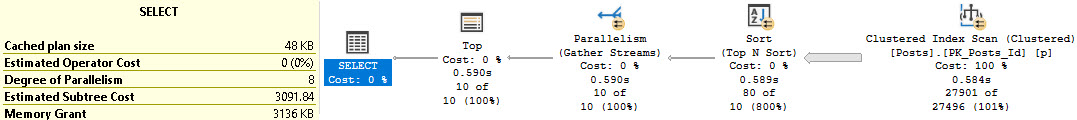

- Parallelism

- Optimistic Isolation Levels

- Max Server Memory

- tempdb stuff

- Instant File Initialization

- Lock Pages In Memory (mostly)

Further out, we have the hardware:

- CPU

- Memory

- Disk

- Network Path To Disks (SAN)

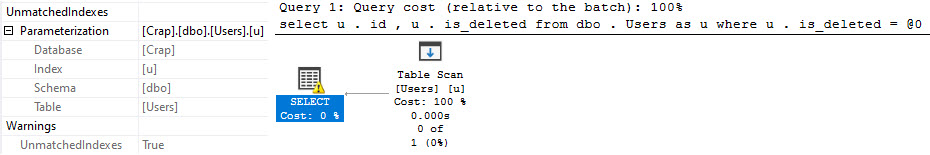

A lot of times something being off in one place can make it look like there’s a bottleneck in another place. Probably the easiest example to conjure up is if you’re missing an opportune nonclustered index, and you end up scanning a clustered index over and over again. The clustered index doesn’t fit in memory, so you end up generating a lot of PAGEIOLATCH_SH waits.

It might look like you have a memory shortage, but in reality you could be making far better use of the memory you have.

Margin For Error

Being able to identify and fix specific bottlenecks is an important skill. Wait stats and other counters can be useful, but are often underwhelming unless you’re watching at the right time, or you have a monitoring tool that logs them for you. Bottlenecks can live in many places, and oftentimes performance tuning is like playing whack-a-mole with them.

Hitting hard limits and running out of CPU or RAM is never fun. Picking hardware that’s up to the task is step one, but staying on top of query and index tuning to make sure it stays adequate for longer is a process that you need to go through. If this is the sort of thing you need help with, drop me a line.

Thanks for reading!

Going Further

If this is the kind of SQL Server stuff you love learning about, you’ll love my training. I’m offering a 75% discount to my blog readers if you click from here. I’m also available for consulting if you just don’t have time for that and need to solve performance problems quickly.