Enough Already

I see people do things like this fairly often with UDFs. I don’t know why. It’s almost like they read a list of best practices and decided the opposite was better.

This is a quite simplified function, but it’s enough to show the bug behavior.

While writing this, I learned that you can’t create a recursive (self-referencing) scalar UDF with the schemabinding option. I don’t know why that is either.

Please note that this behavior has been reported to Microsoft and will be fixed in a future update, though I’m not sure which one.

Swallowing Flies

Let’s take this thing. Let’s take this thing and throw it directly in the trash where it belongs.

CREATE OR ALTER FUNCTION dbo.how_high

(

@i int,

@h int

)

RETURNS int

WITH

RETURNS NULL ON NULL INPUT

AS

BEGIN

SELECT

@i += 1;

IF @i < @h

BEGIN

SET

@i = dbo.how_high(@i, @h);

END;

RETURN @i;

END;

GO

Seriously. You’re asking for a bad time. Don’t do things like this.

Unless you want to pay me to fix them later.

Froided

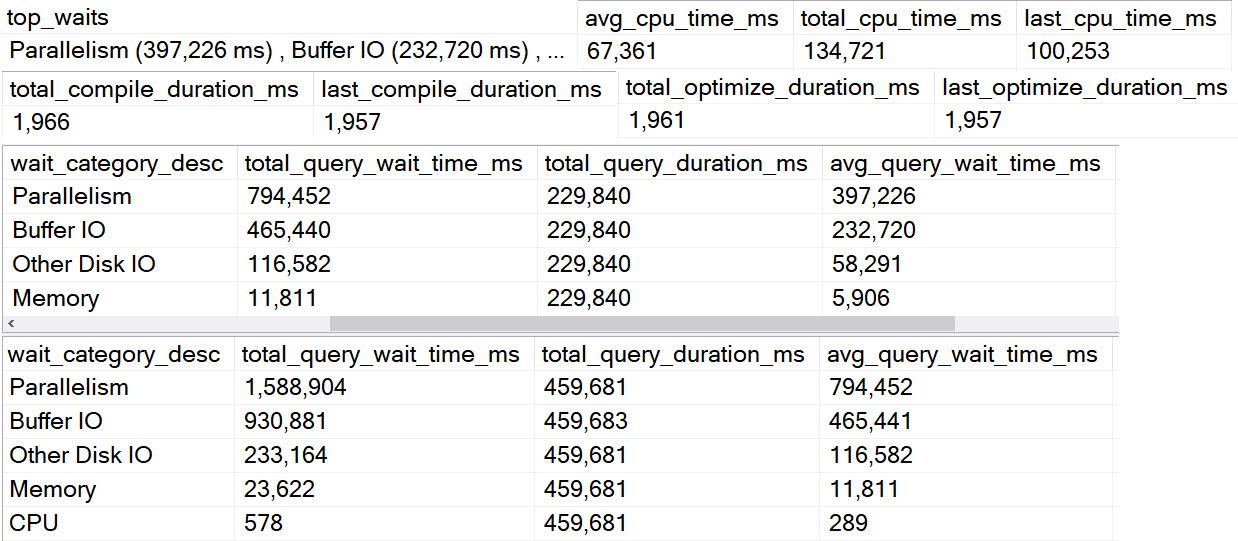

In SQL Server 2019, under compatibility level 150, this is what the behavior looks like currently:

/*

Works

*/

SELECT

dbo.how_high(0, 36) AS how_high;

GO

/*

Fails

*/

SELECT

dbo.how_high(0, 37) AS how_high;

GO

The first execution returns 36 as the final result, and the second query fails with this message:

Msg 217, Level 16, State 1, Line 40 Maximum stored procedure, function, trigger, or view nesting level exceeded (limit 32).

A bit odd that it took 37 loops to exceed the nesting limit of 32.

This is the bug.

Olded

With UDF inlining disabled, a more obvious number of loops is necessary to encounter the error.

/*

Works

*/

SELECT

dbo.how_high(0, 32) AS how_high

OPTION (USE HINT('DISABLE_TSQL_SCALAR_UDF_INLINING'));

GO

/*

Fails

*/

SELECT

dbo.how_high(0, 33) AS how_high

OPTION (USE HINT('DISABLE_TSQL_SCALAR_UDF_INLINING'));

GO

The first run returns 32, and the second run errors out with the same error message as above.

Does It Matter?

It’s a bit hard to imagine someone relying on that behavior, but I found it interesting enough to ask some of the nice folks at Microsoft about, and they confirmed that it shouldn’t happen. Again, it’ll get fixed, but I’m not sure when.

Thanks for reading!

Going Further

If this is the kind of SQL Server stuff you love learning about, you’ll love my training. I’m offering a 75% discount to my blog readers if you click from here. I’m also available for consulting if you just don’t have time for that and need to solve performance problems quickly.