In The Beginning

Or at least at some point, back when I gave all my precious blog blood somewhere else, I used to quite enjoy writing the release notes for the First Responder Kit. It was fun, and there were a lot of contributors to credit.

This most recent release had a note in it that got me taking a stroll down memory lane.

Deprecating sp_BlitzInMemoryOLTP, sp_BlitzQueryStore, and sp_AllNightLog

sp_BlitzQueryStore was originally written by Erik Darling when he worked here. He’s moved on to start his own excellent company, plus his own sp_QuickieStore. You should be using that instead.

sp_BlitzInMemoryOLTP was always kinda distributed as a courtesy – the real home for it is in KTaranov’s Github repository, and you can still find it there. It hasn’t been updated in over 6 years, and I’ve never seen anyone using it, so I’m removing it to streamline support issues.

sp_AllNightLog was a ton of fun when we built it several years ago, but it’s consistently had a problem. Companies start using it, then decide they want to build something even more ambitious, typically a C# service with robust error handling and scheduling. sp_AllNightLog isn’t the kind of thing I want to encourage beginners to use – it’s complex.

I didn’t have much to do with sp_BlitzInMemoryOLTP. I’m still not entirely sure what it does. All I know is that In-Memory was the hottest frog in the pan for exactly 14.9 minutes.

But I have some quite fond memories of building sp_BlitzQueryStore, and sp_AllNightLog.

I didn’t write every bit of code in either one, but I definitely started work on them and pitched in quite a bit on both. Other contributors deserve whatever credit they’re publicly willing to take.

If you’ll permit a fella with a lot more grey on his face than there was when these two procedures were first F5 birthed into the world to reminisce a bit, I would like to eulogize them here.

sp_BlitzQueryStore

I had been working on sp_BlitzCache just about full time for a couple years, since Jeremiah (who wrote it originally) had decided to embark on a professorial career. When Query Store got announced, I knew I wanted to write something for it.

After all, this seemed like a no-brainer for folks on SQL Server 2016 to adopt. I just had no concept of what I wanted to do, until one day…

I had just gotten off the phone with the worst credit card company in the world, because someone had purchased ONE-HUNDRED $99 PlayStation gift cards from a Russian IP address with a .ru email, and they told me that I would need to fill out 100 dispute PDFs to dispute each charge separately.

I forget where I was walking home from, but I was in the company Slack, and I had a message from BrentO asking how I wanted to approach it, and I felt like I had to make something good up on the spot. It turned out to be: I want to find all of the worst metric spikes, and grab the queries that ran during them. So it would look for the highest CPU, reads, writes, memory, tempdb, etc. and look for the queries responsible for them. And since we have all this groovy historical data, I wanted to show which queries were prone to parameter sensitivity by looking for wild swings in those metrics.

In theory, this was a great idea. In practice, those queries were god-awful slow. It wasn’t all my fault, of course; I can’t take full credit. I see a lot of scripts (including queries from the SSMS GUI) that hit Query Store and are just as god-awful slow.

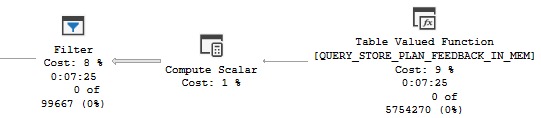

Perhaps ironically, some of the absolute slowest points in any Query Store query are the ones that hit the “in memory” tables.

At any rate, SQL Server 2016 adoption was fairly slow, and Query Store adoption was even slower. It was even hard to recommend turning it on at first because of all the bugs and issues that were cropping up and getting fixed in CUs (and even then, Service Packs). SQL Server 2017 didn’t help things at all, and I was out on my own in the world by the time SQL Server 2019 got released.

So poor ol’ sp_BlitzQueryStore languished a bit. Of course, as I added checks and gizmos to sp_BlitzCache, I’d also add them to sp_BlitzQueryStore, but… It just wasn’t the same everyday utility belt tool for me.

I sort of lost faith in the whole thing sometime in 2018, when I tweaked a query in sp_BlitzQueryStore to try to speed things up, and it made my local SQL instance stack dump, and I had to manually restart it. If that happened with a client, hoo boy!

But here’s to you, sp_BlitzQueryStore! It’s how I first started learning the Query Store DMVs, how they related, and what data was in them.

You’re like that long-term relationship that ends before you meet the person you end up marrying.

sp_AllNightLog

This made me feel cool, because:

- I’d always loved Log Shipping (still hate AGs)

- It was my first “programming” stored procedure

Let me clarify point 2 a bit, because I’m not one of those “SQL isn’t coding” people. Most things that I write only work with data. This worked with REAL LIVE FILES. Writing them. Copying them. Restoring them. Across two different servers. Where neither one knew the other existed.

Wild. WILD!

I’m not sure if I’m allowed to say the name of the company that wanted it, but they were based in Chicago, so I ended up flying out there a couple times to work on it along with Brent.

That kind of stuff had never happened to me before, and has only happened a couple times since.

I learned some hard lessons from this one:

- If you fat-finger an invalid date value in an output parameter, you’ll end up with a REALLY HARD BUG TO FIND

- If you don’t add a WAITFOR to looping code that’s constantly looking for new databases, new backups to take, and new backups to restore, you can really drive one CPU crazy
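To make that second lesson concrete, here’s a minimal sketch of a polling loop that pauses when there’s nothing to do. This is not the actual sp_AllNightLog code: the `@pending` table variable, the `@passes` cap, and the five-second delay are all made up for illustration, and a real worker would loop until told to stop rather than for three passes.

```sql
/* Hypothetical work queue; a stand-in for "new databases and backups to handle" */
DECLARE @pending table (database_name sysname NOT NULL);
DECLARE @passes integer = 0;

INSERT @pending (database_name)
VALUES (N'db1'), (N'db2');

/* Capped at a few passes so the sketch terminates on its own */
WHILE @passes < 3
BEGIN
    IF EXISTS (SELECT 1 FROM @pending)
    BEGIN
        /* Placeholder for the real work: take or restore a backup */
        DELETE TOP (1) FROM @pending;
    END
    ELSE
    BEGIN
        /* Nothing to do: sleep instead of spinning on the CPU */
        WAITFOR DELAY '00:00:05';
    END;

    SET @passes += 1;
END;
```

Without the WAITFOR branch, an empty queue means the loop re-runs its check as fast as the scheduler allows, which is how you end up with one CPU pegged doing nothing useful.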

It was also when I learned that you can’t add triggers to “system” tables, like restorehistory, in the dbo schema, in msdb. If we could have done that, a few things would have been way easier.

Of course, my fondest memory of this one was when it finally worked. I remember hitting a bazillion errors and issues and debugging stuff for ages. And then one magical day, the Agent jobs started up, and it was all green.

I was far from alone in working on it; I don’t want it to sound like THIS ONE’S ALL ME. There was a group of 3-4 people who put in work writing and testing things.

That was kind of the nicest thing about it — real collaboration with real people in real life — not just pushing changes around the internet and asking people to test them.

The Departed

While it is a bit sad to see them go, I totally understand why they had to. It’s difficult to be responsible for large code repos that you’ve become unfamiliar with because you don’t use them regularly.

I’m a bit surprised that sp_BlitzBackups didn’t also end up in the deprecation pile. It hasn’t had an issue opened since 2019, or any commits aside from version bumps. But maybe it’s just that well-written! If that ever does get deprecated, I have exactly one funny memory of the writing process, and it probably won’t get a blog post. I can spare you the drama of “it runs really slow when there are hundreds of databases” and “how slow?” and “like a minute” and “why do you need to run it every 15 seconds anyway?”.

Perhaps the most surprising thing about the years since 2016 is that not a single third party monitoring tool has embraced Query Store data in their performance diagnostics. Hopefully someday someone tells them about it, I guess?

But hey, that’s enough about that! Go get the most recent release of the First Responder Kit because it has a bunch of new and improved in it. Enjoy your shinies while they last.

Thanks for reading!

“Perhaps the most surprising thing about the years since 2016 is that not a single third party monitoring tool has embraced Query Store”

It’s almost like it’s some combination of hard to use, low adoption, and limited value.

Well, I agree with the first two, but I do get a lot of value out of the data for my purposes.

It could be better, of course, but everything could be better, except the first Motley Crue album.

“I have exactly one funny memory of the writing process, and it probably won’t get a blog post”

You can’t say that and not tell us! 😀

I did! It’s in the same paragraph!

Ha okay! I took the phrase “I can spare you” to mean that that wasn’t the thing 😀

Interesting read by the way, thanks!

I don’t really understand 3rd party monitoring not using the already collected data in Query Store. Maybe it’s because they can’t rely on it being turned on for every database, or at all, so they make a product that doesn’t need it. I suppose if it was on for some databases and not others, that also might be challenging to merge query store data with data collected in other ways.

It wouldn’t take much to check if it’s turned on and collect data from there. How many times have you looked at a monitoring tool and had a missing query plan? Kind of a shame to not use all available resources.

Sooooo, is this your nostalgic version? It’s always interesting to read about pieces of history, even when it comes to SQL Server scripts.

Of course, thank you for all your work, commitment and dedication!

Hahaha, thanks. Yeah, you know, developing stuff like this has plenty of frustration and aggravation alongside the good memories. I don’t need to tell most people about the annoying parts of getting XML queries to work correctly.