Like vim, I Can’t Quit You

I’m going to be honest with you, I have no idea how most people who use sp_BlitzCache run it.

Most people I talk to are like “oh, that’s not sp_Blitz?”

Ahem.

Anyway, I’m going to show you a cool way to look at your plan cache a little bit more differenter than usual.

Average The Life

When you run it and use @SortOrder, it will sort by the “total” column of whichever metric you pick. CPU, Reads, Duration, Writes — you get the picture.

But you can also run it to sort by what uses the most of a metric on average.

Sure, totals point out what runs a lot — but things that run a lot might not have much to tune.

You can make a note like “Should we be caching this?” for you developers to laugh at.

Here are some examples:

EXEC sp_BlitzCache @SortOrder = 'avg cpu'; EXEC sp_BlitzCache @SortOrder = 'avg duration'; EXEC sp_BlitzCache @SortOrder = 'avg reads'; EXEC sp_BlitzCache @SortOrder = 'avg writes'; EXEC sp_BlitzCache @SortOrder = 'avg spills'; EXEC sp_BlitzCache @SortOrder = 'memory grant';

No, memory grant isn’t an average. But it can show you some real bangers.

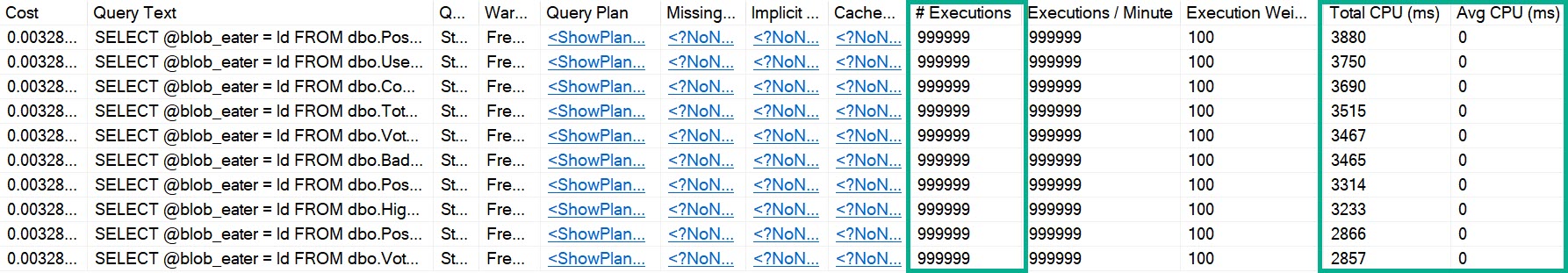

Here’s an example of why you should use those average sort orders:

EXEC sp_BlitzCache @SortOrder = 'cpu'

Those queries all executed a whole bunch. They racked up a bunch of total CPU time.

But looking at any of those execution plans, aside from not running the query so much, there’s nothing really to tune.

But if we look at the plan cache by averages…

EXEC sp_BlitzCache @SortOrder = 'avg cpu'

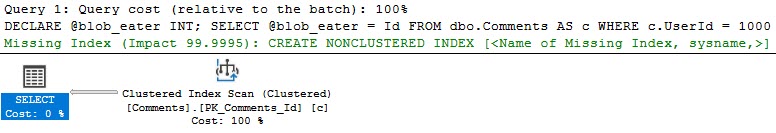

We get… Alright, look. Those queries all have recompile hints. They still show up.

But the top one is interesting! It has way higher average CPU than the rest.

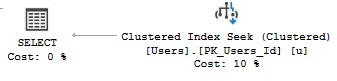

This query plan is a little bit different. It’s scanning the clustered index rather than seeking, and it’s got a missing index request.

In total, it wasn’t using a lot of CPU compared to other queries, but on average it was a lot suckier.

SQL University

I always look at the averages, because you can find some really interesting plans in there.

Sure, you might find some one-off stuff that you can ignore, but that’s what @MinimumExecutionCount is for.

You did read the documentation, didn’t you?

Queries that use a lot of resources on average often stand a good chance at being tuned, where queries that just use a lot of resources because of how frequently they run may not.

Anyway, that’ll be $1.

Thanks for reading!

Going Further

If this is the kind of SQL Server stuff you love learning about, you’ll love my training. I’m offering a 75% discount to my blog readers if you click from here. I’m also available for consulting if you just don’t have time for that and need to solve performance problems quickly.

Related Posts

- Cursor Declarations That Use LOB Local Variables Can Bloat Your Plan Cache

- Another Method to Reduce Temp Table Plan Cache Pollution

- SQL Server Community Tools: Capturing Which Queries Are Recompiling And Why With sp_HumanEvents

- SQL Server Community Tools: Capturing Which Queries Are Compiling With sp_HumanEvents

Hey Erik. First, thanks for giving back to our field – your insights are needed, and very appreciated. Question.. What’s the best way to get the *full* sql_text for problem queries returned by sp_BlitzCache? I work in a shop with a ton of monster procs and queries that are routinely truncated in the [sql_text] output field. Hoping that you have a shareable technique for viewing all of the code. Thanks for any feedback.

If it’s coming from a proc, and BlitzCache returns the proc name, why not just use sp_helptext or script the proc from the database?

As far as the text being cut off, even SSMS has a limitation of 65535 characters in a grid field. That’s not something I can really change ?

Thanks. I was thinking it was an sp_BlitzCache limitation (which might be remedied with a parameter tweak) as opposed to an SSMS limitation. Gotta start with the obvious ?