Update Stats, They Said

Okay look, you probably should update stats. At least when you do it, you have some control over the situation.

If you let SQL Server get up to its own devices, you might become quite surprised.

One after-effect of updated stats is, potentially, query plan invalidation. When that happens, SQL Server might get hard to work coming up with a new plan that makes sense based on these new statistics.

And that, dear friends, is where things can go bad.

New Contributor ?

Let’s say we have this query, which returns the average post and comment score for a single user.

CREATE OR ALTER PROCEDURE dbo.AveragePostScore(@UserId INT)

AS

BEGIN

SET NOCOUNT, XACT_ABORT ON;

SELECT u.DisplayName,

AVG(p.Score * 1.) AS lmao_p,

AVG(c.Score * 1.) AS lmao_c

FROM dbo.Users AS u

JOIN dbo.Posts AS p

ON p.OwnerUserId = u.Id

JOIN dbo.Comments AS c

ON c.UserId = u.Id

WHERE u.Id = @UserId

GROUP BY u.DisplayName;

END;

GO

Most of the time, the query runs fast enough for the occasional run to not end too poorly.

But then a recompile happens, and a new contributor decides to look at their profile.

Okay To Worse

What comes next you could fill a textbook with.

EXEC dbo.AveragePostScore @UserId = 3150367;

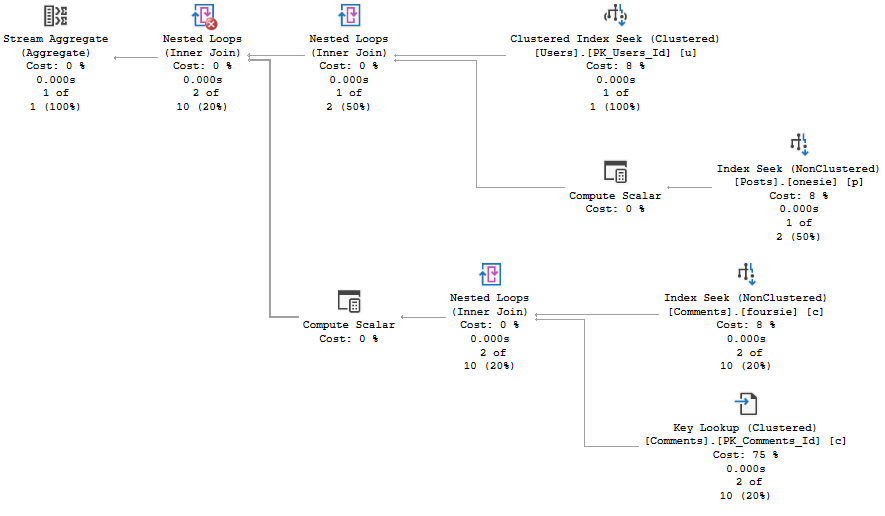

A new plan gets compiled:

And you know, it looks great for a new user.

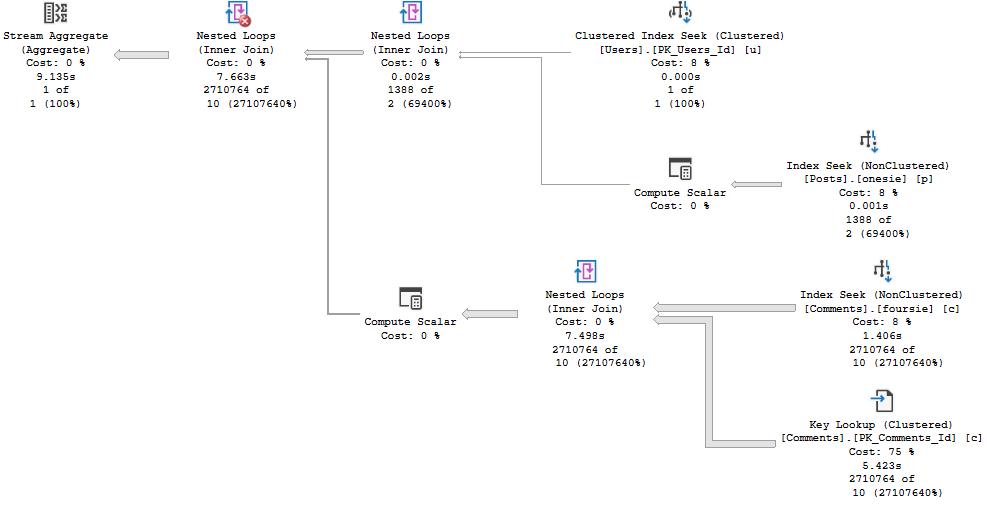

And you know, it looks not so great for a slightly more seasoned user.

So What Changed?

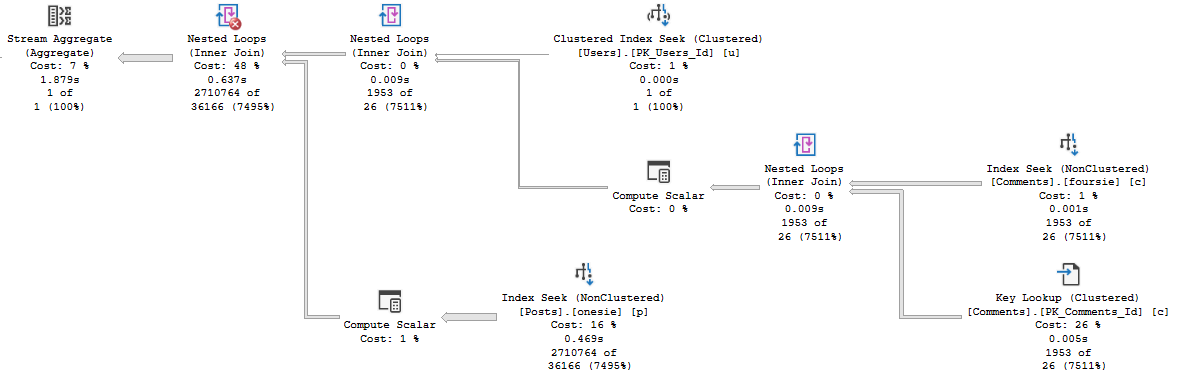

Running the query first for a user with a bit more site history gives us a plan with a very different shape, that finishes in under 2 seconds. Repeating that plan for less experienced users doesn’t cause any problems. It finishes in very little time at all.

The plan itself remains largely more familiar than most parameter sniffing scenarios wind up. There are plenty more similarities than differences. It really does just come down to join order here.

Alright, now we know what happened. How would we figure out if this happened to us IRL?

I Shot The Trouble

We can do what we did yesterday, and run sp_BlitzFirst. That’ll warn us if stats recently got updated.

EXEC sp_BlitzFirst @Seconds = 10, @ExpertMode = 1;

If we want to try to validate if that stats update messed with a plan, we can use sp_WhoIsActive:

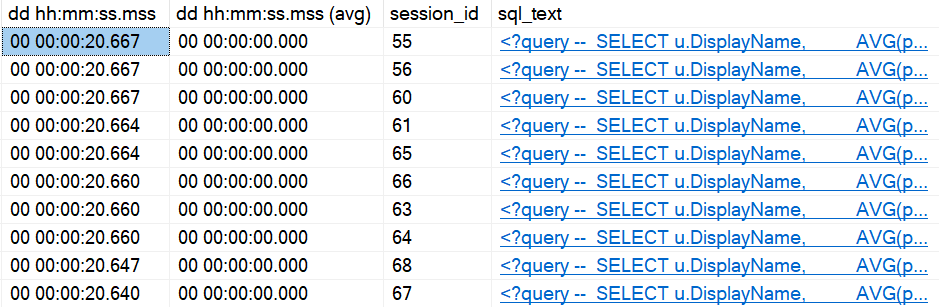

EXEC sp_WhoIsActive @get_plans = 1, @get_avg_time = 1;

The get_avg_time parameter is one I’ve talked about before, and in this case it’s quite helpful.

We’ve got a bunch of queries that usually run in 0 seconds running for at least 20 seconds.

Fingerprinted

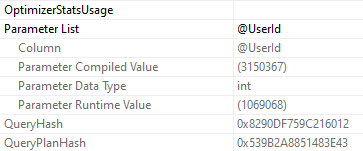

Since we used get_plans here too, we can grab the execution plan, and see which parameters were used on compilation and execution.

Get the properties of the select operator, either by right clicking and selecting properties, or hitting F4.

Now we know two things, and can test four things:

- If we run this query using the compiled value, is it fast?

- If we run this query using the runtime value, is it slow?

- If we recompile and run it for the runtime value, is it fast or slow?

- Is the compile time value still fast when it uses the “new” plan?

This is a little easier with stored procedures, because you can do something like:

EXEC sys.sp_recompile @objname = N'dbo.AveragePostScore';

To use DBCC FREEPROCCACHE to target a specific query, you need the sql handle or plan handle. You don’t wanna jump off and clear the whole cache here, unless you’re desperate. Just make sure you understand that you might fix one query, and break others, if you clear the whole thing.

It’s better to be targeted when possible.

And of course, if you’ve got Query Store up and running, you may do well to look at Regressed or High Variance query views there, and force the faster plan.

Thanks for reading!

Going Further

If this is the kind of SQL Server stuff you love learning about, you’ll love my training. I’m offering a 75% discount to my blog readers if you click from here. I’m also available for consulting if you just don’t have time for that and need to solve performance problems quickly.