Quickly, man!

I’m still looking into this a bit, but I ran into this issue when helping set up a process to migrate data to a table because the original table was running out of integer identity values.

The process looks something like:

- Create a new table with the right definition

- Write a loop to backfill existing table data

- Create a trigger to keep updated data synchronized

- Eventually swap the tables during a short maintenance window

All well and good! Except… You can end up with a really weird execution plan for the backfill process, and some pretty gnarly memory grants for wide tables.

Demonstrating with the Votes table:

CREATE TABLE

dbo.Votes_bigint

(

Id bigint IDENTITY NOT NULL,

PostId int NOT NULL,

UserId int NULL,

BountyAmount int NULL,

VoteTypeId int NOT NULL,

CreationDate datetime NOT NULL,

CONSTRAINT PK_Votes_bigint_Id

PRIMARY KEY CLUSTERED (Id ASC)

)

GO

INSERT

dbo.Votes_bigint

(

Id,

PostId,

UserId,

BountyAmount,

VoteTypeId,

CreationDate

)

SELECT TOP (100000)

v.Id,

v.PostId,

v.UserId,

v.BountyAmount,

v.VoteTypeId,

v.CreationDate

FROM dbo.Votes AS v

ORDER BY v.Id;

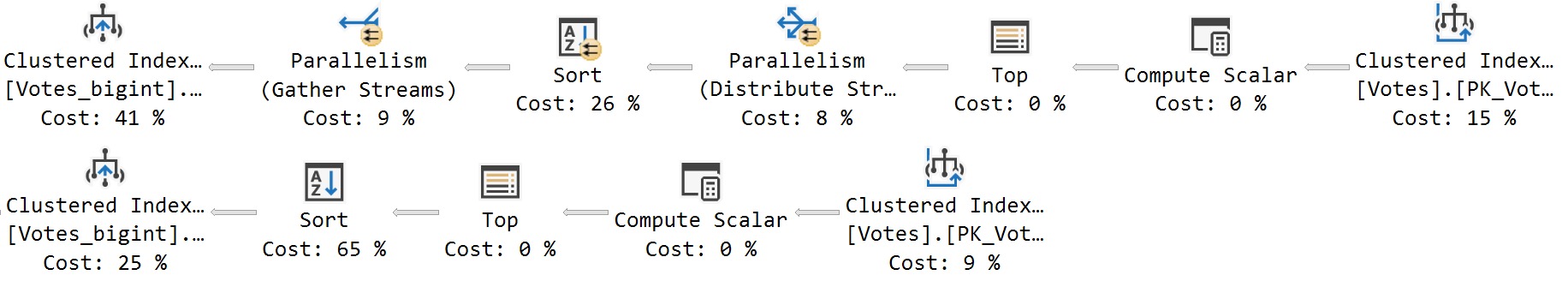

The query plan has a Sort in it, which is weird because… Both tables are primary key/clustered index on the same column. Why re-sort that data?

I’m showing both serial and parallel plans:

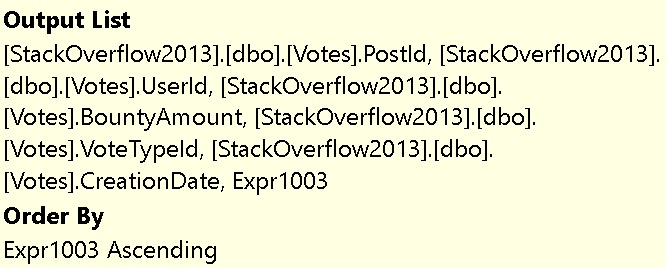

The Sort is always on this expression:

Which in the XML looks like this:

[StackOverflow2013].[dbo].[Votes_bigint].[Id] = [Expr1003]

Thanks for reading!

Going Further

If this is the kind of SQL Server stuff you love learning about, you’ll love my training. I’m offering a 75% discount to my blog readers if you click from here. I’m also available for consulting if you just don’t have time for that, and need to solve database performance problems quickly. You can also get a quick, low cost health check with no phone time required.

I bet it’s the compute scalar that is causing it – the data type conversion. The optimizer probably isn’t smart enough to know that INT is a subset of BIGINT. Given the high percentage cost of those sorts, you’d think this would be an easy win for the optimizer team to look at.

Yep, that’d be nice.

Thanks for great content as always! I believe there is a typo on the code, and Id field should be BIGINT right ?

Ooh you’re right! Fixed.

In my test, it is due to Id column IDENTITY attribute. If you remove it , the sort it is not needed. Checking the Column Scalar properties, seems IDENTITY add some “internal” function, probably non deterministic, therefore need a short to ensure order.

Right, but if the point is to replace the table and maintain the same behavior, it’d be really painful to add the identity attribute to the column later. I guess you could use a sequence instead, but I’m not sure you’d get different behavior.

Not adding PRIMARY KEY Constraint, seems to eliminate the sort as well. So it may be worth, to eventually swap the tables then alter the table to add primary key constraint.

If I’m moving from an int to a bigint because I’m running out of int values, how do you think adding a primary key after swapping in ~2 billion rows is going to turn out?

Right, so that “little” sort doesn’t look that bad after all !

It’s not always “little”, which is why I wrote this post.

Do you find trace flag 8795 helps at all

Yeah, pesky DML Sort.

That’s hell! Do it in the maintenance windows all together! Why did you use a trigger? Let’s do it in the window! 😉

If I could have my wish, it would be that migrations from int to bigint (or any smaller integer type to a larger one) are metadata-only change. Similar to how adding a nullable column is a metadata-only change. I’m not sure what technical challenges would be involved.

By a large coincidence this month, we also found ourselves migrating a table that had also run out of integer space. So this scenario can’t be that rare.

We were able to keep the table(s) online by taking a very brief SCH-M lock to do the table-switch:

https://michaeljswart.com/2012/04/modifying-tables-online-part-5-just-one-more-thing/

Great content as usual Erik, but out of curiosity… Why here is not an option to use the “famous” spaghettiDBA ( Gianluca Sartori ) method : enabling row compression on the table, and then altering the column to bigint in one shot ? LINK : https://www.brentozar.com/archive/2020/04/video-altering-datatypes-with-almost-no-downtime/

That only works in very limited scenarios. I haven’t been able to use it in real-life once.