Columnstore has quite a few different tricks for compressing data. This blog post explores if it’s possible for a rowstore table to beat columnstore compression, even in the best case scenario for the columnstore table (no delta stores and rowgropus of the maximum size).

Page Compression and String Data

Everybody knows that strings aren’t the best fit for columnstore tables. Let’s start by puting sequential integers from 1 to 1048576 into a page compressed table:

DROP TABLE IF EXISTS dbo.RS_VARCHAR33; GO CREATE TABLE dbo.RS_VARCHAR33 ( ID1 VARCHAR(33) ) WITH (DATA_COMPRESSION = PAGE); INSERT INTO dbo.RS_VARCHAR33 WITH (TABLOCK) SELECT TOP (1048576) ROW_NUMBER() OVER (ORDER BY (SELECT NULL)) ID FROM master..spt_values t1 CROSS JOIN master..spt_values t2 OPTION (MAXDOP 1);

According to sp_spaceused, the table takes up 11464 KB of space. Next load the same data into a columnstore table:

DROP TABLE IF EXISTS dbo.CCI_VARCHAR33; GO CREATE TABLE dbo.CCI_VARCHAR33 ( ID1 VARCHAR(33), INDEX C CLUSTERED COLUMNSTORE ); INSERT INTO dbo.CCI_VARCHAR33 WITH (TABLOCK) SELECT TOP (1048576) ROW_NUMBER() OVER (ORDER BY (SELECT NULL)) ID FROM master..spt_values t1 CROSS JOIN master..spt_values t2 OPTION (MAXDOP 1);

The CCI takes up 14408 KB of space. The majority of the space is used for the dictionary. The follow query returns 11495348 bytes:

SELECT csd.on_disk_size

FROM sys.column_store_dictionaries csd

INNER JOIN sys.partitions sp

ON csd.partition_id = sp.partition_id

WHERE sp.[object_id] = OBJECT_ID('CCI_VARCHAR33');

Data types matter here. If I change the CCI to have a VARCHAR(32) column instead then the table only requires 1800 KB of space. A dictionary is not created in this case. A takeaway is that SQL Server may choose to create a dictionary based on the length of the defined string column. Data sets with many unique strings may require a relatively large amount of space for the dictionary, even to the point where page compressed data can have overall a lower footprint on the database.

Page Compression and Non-string Data

It’s certainly more difficult to come up with a demo that works without string columns, but consider how the page compression algorithm works. Data can be compressed on page basis, which includes both multiple rows and multiple columns. That means that page compression can achieve a higher compression ratio when a row has identical values in different columns. Columnstore is only able to compress on an individual column basis and you won’t directly see better compression with repeated values in different columns for a single row (as far as I know).

The table defined and populated below requires 11912 KB of disk space:

DROP TABLE IF EXISTS dbo.RS_4_COLUMN; GO CREATE TABLE dbo.RS_4_COLUMN ( ID1 BIGINT, ID2 BIGINT, ID3 BIGINT, ID4 BIGINT ) WITH (DATA_COMPRESSION = PAGE); INSERT INTO dbo.RS_4_COLUMN WITH (TABLOCK) SELECT ID, ID, ID, ID FROM ( SELECT TOP (1048576) ROW_NUMBER() OVER (ORDER BY (SELECT NULL)) / 10 ID FROM master..spt_values t1 CROSS JOIN master..spt_values t2 ) q OPTION (MAXDOP 1);

If I rerun the code with five columns, the table now takes up 13000 KB of space. That’s only an increase of 9% in space to hold 25% more data. The same data loaded into a columnstore table with four columns takes 11272 KB of space. Adding one column results in a total size of 14088 KB, which is almost exactly a 25% increase. For this data set with five columns, page compression across rows is more efficient than standard columnstore compression.

Row Compression and Non-string Data

Row compression doesn’t allow for compression benefits from storing the same value in multiple columns. Is it possible to beat columnstore compression with row compression without string columns? You betcha!

DROP TABLE IF EXISTS #BATCH_MODE; CREATE TABLE #BATCH_MODE ( ID INT, INDEX C CLUSTERED COLUMNSTORE ); DROP TABLE IF EXISTS dbo.RS_2_COLUMN_ROW_COMPRESSED; GO CREATE TABLE dbo.RS_2_COLUMN_ROW_COMPRESSED ( ID1 BIGINT, ID2 BIGINT ) WITH (DATA_COMPRESSION = ROW); INSERT INTO dbo.RS_2_COLUMN_ROW_COMPRESSED WITH (TABLOCK) SELECT SUM(q.ID) OVER (ORDER BY q.ID) , SUM(q.ID) OVER (ORDER BY q.ID) FROM ( SELECT TOP (1048576) ROW_NUMBER() OVER (ORDER BY (SELECT NULL)) ID FROM master..spt_values t1 CROSS JOIN master..spt_values t2 ) q LEFT OUTER JOIN #BATCH_MODE ON 1 = 0 OPTION (MAXDOP 1); EXEC sp_spaceused 'RS_2_COLUMN_ROW_COMPRESSED'; DROP TABLE IF EXISTS #BATCH_MODE; CREATE TABLE #BATCH_MODE ( ID INT, INDEX C CLUSTERED COLUMNSTORE ); DROP TABLE IF EXISTS dbo.CCI_2_COLUMN; GO CREATE TABLE dbo.CCI_2_COLUMN ( ID1 BIGINT, ID2 BIGINT, INDEX C CLUSTERED COLUMNSTORE ); INSERT INTO dbo.CCI_2_COLUMN WITH (TABLOCK) SELECT SUM(q.ID) OVER (ORDER BY q.ID) , SUM(q.ID) OVER (ORDER BY q.ID) FROM ( SELECT TOP (1048576) ROW_NUMBER() OVER (ORDER BY (SELECT NULL)) ID FROM master..spt_values t1 CROSS JOIN master..spt_values t2 ) q LEFT OUTER JOIN #BATCH_MODE ON 1 = 0 OPTION (MAXDOP 1); EXEC sp_spaceused 'CCI_2_COLUMN';

In this example, the rowstore table has a total size of 15496 KB but the columnstore has a total size of 16840 KB. I’m honestly not sure why this happens. I did try to pick the unfriendliest data set to columnstore compression that I could: no repeated values and no obvious patterns in data. Perhaps there’s some additional overhead of compression that pushes it over the row compressed data.

Final Thoughts

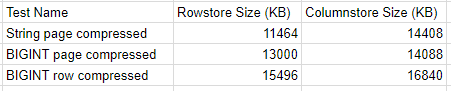

Columnstore doesn’t guarantee better compression than rowstore, even with perfectly sized rowgroups. Rowstore can provide better compression ratios for string columns, tables with repeated values across columns, and in other uncommon scenarios. A summary of test results is here:

The differences are small per rowgroup, but they can add up as more columns and more rows are added to the tables. I’ve seen tables in the real world that ended up bigger as columnstore compared to rowstore, which is what inspired me to look for some of these examples.

Thanks for reading!

Going Further

If this is the kind of SQL Server stuff you love learning about, you’ll love my training. I’m offering a 75% discount to my blog readers if you click from here. I’m also available for consulting if you just don’t have time for that and need to solve performance problems quickly.