Consider the following code that calls SYSDATETIME() 10 million times in a loop:

GO CREATE OR ALTER PROCEDURE #p_local AS BEGIN SET NOCOUNT ON; DECLARE @dummy DATETIME2(7), @loops INT = 0; WHILE @loops <= 10000000 BEGIN SET @dummy = SYSDATETIME(); SET @loops = @loops + 1; END; END; GO EXEC #p_local;

On my machine the code takes about 11.6 seconds to execute. Replacing SYSDATETIME() with SYSUTCDATETIME() makes the code take only 4.3 seconds to execute. Why is SYSUTCDATETIME() so much faster than SYSDATETIME()?

It’s always a CPU problem

Both while loops drive a CPU core to 100% while executing. Within SQL server, elapsed time nearly equals CPU. For this code, I wouldn’t expect waits, latches, or spinlocks to reveal any clues. Query plans, execution stats, and other DMVs are unlikely to help as well. One thing that could help is information about the internals of SYSDATETIME() and SYSUTCDATETIME(). There’s a little bit on books online:

SQL Server obtains the date and time values by using the GetSystemTimeAsFileTime() Windows API. The accuracy depends on the computer hardware and version of Windows on which the instance of SQL Server is running. The precision of this API is fixed at 100 nanoseconds. The accuracy can be determined by using the GetSystemTimeAdjustment() Windows API.

Probably all of the useful information is likely locked behind the vault at Microsoft. Fortunately, both SQL Server and the guest OS are happy to give us information about what SQL Server is using its CPU to do if we have the right public symbols and ask nicely. I know of four primary ways of doing this:

- A True Professional could use WinDbg (the cool kids pronounce it “wind bag”) or another debugger to step through the code of both functions. I don’t have the skills or patience to do that.

- DBCC STACKDUMP or sqldumper.exe can be used to create a filtered memory dump on demand. The problem with this approach is that it only provides a single snapshot and our functions execute very quickly.

- Extended events can provide callstacks for many events. I’m not aware of an extended event that would fire at the right times for this code.

- ETW tracing can provide a summary of call stacks during a sampled time period. Common choices are Windows Performance Recorder or PerfView.

Option 4 is exactly what we need. We can run a single query at a time that performs uniform work and we want to see where CPU time is spent during the entire query’s execution. My tool of choice is PerfView.

Revealing SQL Server’s secrets

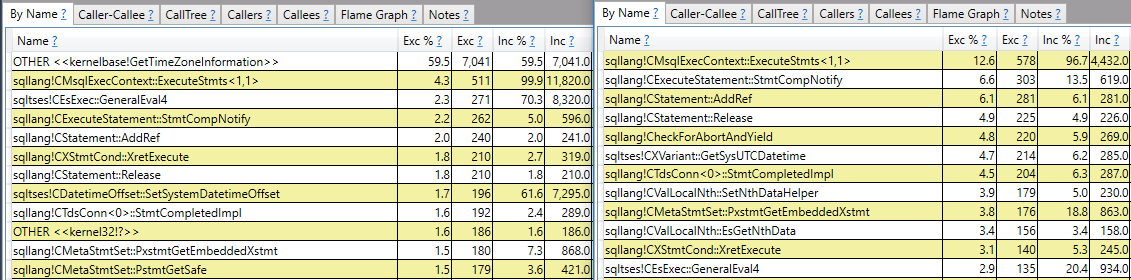

I used PerfView’s default settings and collected ETW profile data separately during both while loops. After resolving symbols and changing folding to 0, there’s a clear difference between SYSDATETIME() (on the left) and SYSUTCDATETIME() (on the right):

kernelbase!GetTimeZoneInformation shows up with a high percentage for exclusive time for SYSDATETIME(). It does not show up at all for SYSUTCDATETIME(). In case it helps, here’s documention for a few of the column names:

Exc – The amount of cost (msec of CPU time) that can be attributed to the particular method itself (not any of its callees)

Exc % – The exclusive cost expressed as a percentage of the total cost of all samples.

Inc – The cost associated with this node as well as all its children (callees) recursively. The inclusive cost of the ROOT contains all costs.

Inc % – The inclusive cost expressed as a percentage of the total cost of all samples (will be 100% for the ROOT node)

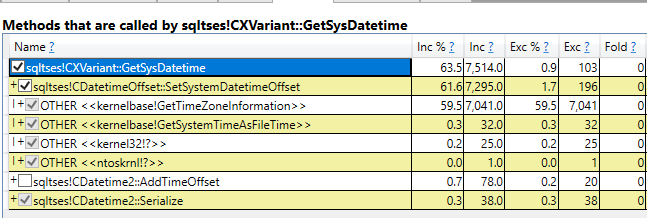

sqltses!CXVariant::GetSysDatetime sounds an awful lot like SYSDATETIME() to me. It also shows up in the right place in the stack to be a match. Drilling into the methods are called by it:

Note that the call to kernelbase!GetTimeZoneInformation takes up nearly all CPU time. There’s also a call to kernelbase!GetSystemTimeAsFileTime that barely shows up as well.

Sharp-eyed readers might be wondering if SYSDATETIMEOFFSET() results in calls to sqltses!CDatetimeOffset::SetSystemDatetimeOffset. The answer is yes, but this is left as an exercise for the reader.

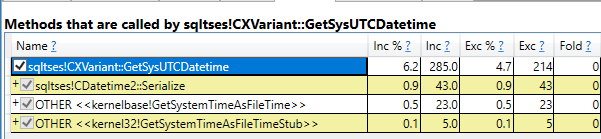

Again, sqltses!CXVariant::GetSysUTCDatetime sounds like awful lot like SYSUTCDATETIME() to me. Drilling into the methods are called by it:

Note the low percentage of inclusive time for the call. SYSUTCDATETIME() is so cheap to call that most of the CPU time is spent executing the loop code instead of that function. kernelbase!GetTimeZoneInformation does not show up at all. There’s is still a call to kernelbase!GetSystemTimeAsFileTime that barely shows up.

This is probably an oversimplification, but I think it’s fair to say that each call to SYSDATETIME() needs to get time zone information. This results in a call to kernelbase!GetTimeZoneInformation that is relatively expensive. There is no need to call that method for SYSUTCDATETIME().

Does this matter? It depends™

Database folks like answering questions with “it depends”. Regrettably, they don’t always provide sufficient or accurate details about what it depends on. I strive to not fall into that trap. For many queries the performance difference between the two functions won’t matter. Consider the following two batches:

DROP TABLE IF EXISTS #BENCHMARK_TIME_FUNCTIONS; CREATE TABLE #BENCHMARK_TIME_FUNCTIONS (SAVED_DATETIME DATETIME2(7) NULL); INSERT INTO #BENCHMARK_TIME_FUNCTIONS WITH (TABLOCK) SELECT TOP (10000000) SYSDATETIME() FROM master..spt_values t1 CROSS JOIN master..spt_values t2 CROSS JOIN master..spt_values t3 OPTION (MAXDOP 1); GO DROP TABLE IF EXISTS #BENCHMARK_TIME_FUNCTIONS; CREATE TABLE #BENCHMARK_TIME_FUNCTIONS (SAVED_DATETIME DATETIME2(7) NULL); INSERT INTO #BENCHMARK_TIME_FUNCTIONS WITH (TABLOCK) SELECT TOP (10000000) SYSUTCDATETIME() FROM master..spt_values t1 CROSS JOIN master..spt_values t2 CROSS JOIN master..spt_values t3 OPTION (MAXDOP 1);

SQL Server doesn’t need to execute the datetime functions once per row. If it did, we’d expect a run time difference of about 7 seconds between the two queries. Instead, they execute in nearly exactly the same amount of time. I think that the details about guarantees of this behavior were found on a Connect (RIP) comment.

The performance difference can make a difference when the function is used to profile code that executes row by row. Suppose that we have related SQL statements and we want to measure the execution time of just one of the statements by calculating the time difference before and after execution. I’m not saying that this is the best way of doing this analysis, but I suspect it to be a common technique. Below is an example stored procedure with one copy using SYSDATETIME() and the other using SYSUTCDATETIME():

GO

CREATE OR ALTER PROCEDURE #USE_SUPERIOR_AMERICAN_TIME AS

BEGIN

DECLARE @start_time DATETIME2(7),

@end_time DATETIME2(7),

@total_microseconds BIGINT = 0,

@loops INT = 0;

SET NOCOUNT ON;

DROP TABLE IF EXISTS dbo.OFFICIAL_STATEMENTS;

CREATE TABLE dbo.OFFICIAL_STATEMENTS (OFFICIAL_STATEMENT VARCHAR(30));

WHILE @loops <= 50000 BEGIN INSERT INTO dbo.OFFICIAL_STATEMENTS VALUES ('NO COLLUSION');

IF @@ROWCOUNT > 0

BEGIN

SET @start_time = SYSDATETIME();

INSERT INTO dbo.OFFICIAL_STATEMENTS VALUES ('NO OBSTRUCTION');

SET @end_time = SYSDATETIME();

SET @total_microseconds = @total_microseconds + DATEDIFF(MICROSECOND, @start_time, @end_time);

END;

SET @loops = @loops + 1;

END;

SELECT @total_microseconds;

END;

GO

EXEC #USE_SUPERIOR_AMERICAN_TIME;

GO

CREATE OR ALTER PROCEDURE #USE_INFERIOR_EUROPEAN_TIME AS

BEGIN

DECLARE @start_time DATETIME2(7),

@end_time DATETIME2(7),

@total_microseconds BIGINT = 0,

@loops INT = 0;

SET NOCOUNT ON;

DROP TABLE IF EXISTS dbo.OFFICIAL_STATEMENTS;

CREATE TABLE dbo.OFFICIAL_STATEMENTS (OFFICIAL_STATEMENT VARCHAR(30));

WHILE @loops <= 50000 BEGIN INSERT INTO dbo.OFFICIAL_STATEMENTS VALUES ('NO COLLUSION');

IF @@ROWCOUNT > 0

BEGIN

SET @start_time = SYSUTCDATETIME();

INSERT INTO dbo.OFFICIAL_STATEMENTS VALUES ('NO OBSTRUCTION');

SET @end_time = SYSUTCDATETIME();

SET @total_microseconds = @total_microseconds + DATEDIFF(MICROSECOND, @start_time, @end_time);

END;

SET @loops = @loops + 1;

END;

SELECT @total_microseconds;

END;

GO

EXEC #USE_INFERIOR_EUROPEAN_TIME;

The second insert query takes a total of 4381219 microseconds with SYSDATETIME() and 4164658 microseconds with SYSUTCDATETIME(). That’s a 5% difference just from changing the datetime function. Of course, given that this code is measuring the difference of times SYSUTCDATETIME() is the correct choice because it remains accurate even if there’s a daylight savings switch during code execution. My own approach is trying to avoid running one-off stored procedures around 2:00 AM.

Final thoughts

There’s a large performance difference between SYSDATETIME() and SYSUTCDATETIME(). It isn’t necessary to guess which one is slower and why. ETW tracing tools such as PerfView can reveal exactly where the performance difference comes from. Thanks for reading!

THANK YOU!, sir!

Funny, while executing #p_local on my work laptop took a very comparable time as reported here (15s and 5s), running the same against Azure Databases takes over 3 min to complete, even for the UTC version …

And that is our … Production Azure DB. In our QA Azure DB I stopped the execution after 6 min as it was not done yet.

Wondering if this is something specific to Azure Databases or is it due to my setup (running from SSMS on my laptop against Azure DB).

Thanks, Radu