Large servers may experience a scalability bottleneck related to the RESERVED_MEMORY_ALLOCATION_EXT wait event during loading of columnstore tables. This blog post shares a reproduction of the issue and discusses some test results.

The Test Server

Testing was done on a four-socket, bare-metal server with 24 cores per socket. The server had 1 TB of RAM, and storage was provided by a SAN. Within SQL Server, we were able to read data at a peak rate of about 5.5 GB/s. Hyperthreading was disabled, but there aren’t any other nonstandard OS configuration settings that I’m aware of.

SQL Server 2016 SP1 CU7 was installed on Windows Server 2016. Most default settings were retained for this testing, including allowing auto soft-NUMA to break up the 96 schedulers into 12 groups of 8, with 3 per memory node. Max server memory was set to around 800000 MB. The user database had 24 data files, indirect checkpoints weren’t used, and the memory model in use was conventional. No interesting trace flags were enabled, except perhaps for TF 4199. Before starting any of the tests, I grew SQL Server’s memory usage to close to max server memory.

Testing Code and Method

The workload test loaded the same data from a SQL Server table into 576 clustered columnstore index (CCI) target tables. For my testing I used a source table of one million rows. Each session grabs a number from a sequence, loads data into the table corresponding to that number, and repeats until there are no more tables to process. This may seem like an odd test to run, but think of it as an abstract representation of a columnstore ETL workload that loads data into partitioned CCIs.
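The sequence turns the 576 tables into a shared work queue: each session claims the next unused table number, so no table is loaded twice and faster sessions simply process more tables. Below is a minimal Python sketch of the same pattern, assuming a lock-protected counter (the hypothetical `TableSequence` class) as a stand-in for the SQL Server sequence and a placeholder where the real session would run its insert:

```python
import threading
from typing import List, Tuple

TABLE_COUNT = 576  # number of CCI target tables in the test


class TableSequence:
    """Hypothetical stand-in for CCI_PARALLEL_TEST_SEQ: hands out 1, 2, 3, ... across threads."""

    def __init__(self) -> None:
        self._next = 1
        self._lock = threading.Lock()

    def next_value(self) -> int:
        with self._lock:
            value = self._next
            self._next += 1
            return value


def run_inserts(seq: TableSequence, session_log: List[int]) -> None:
    """One session: keep claiming table numbers until the sequence passes TABLE_COUNT."""
    table_number = seq.next_value()
    while 0 <= table_number <= TABLE_COUNT:  # mirrors BETWEEN 0 AND 576
        # The real session would INSERT ... SELECT * FROM CCI_SOURCE into this table here.
        session_log.append(table_number)
        table_number = seq.next_value()


def run_test(session_count: int) -> List[Tuple[int, int]]:
    """Start session_count threads and return (session_id, table_number) pairs."""
    seq = TableSequence()
    logs: List[List[int]] = [[] for _ in range(session_count)]  # one log per session
    threads = [
        threading.Thread(target=run_inserts, args=(seq, logs[sid]))
        for sid in range(session_count)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return [(sid, table) for sid, log in enumerate(logs) for table in log]


if __name__ == "__main__":
    results = run_test(12)
    print(len(results), "tables loaded")
```

Because sessions pull work rather than receiving a fixed slice, a slow session never leaves tables stranded; the trade-off is that how many tables each session loads depends on timing.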

The first step is to define the source table. I created a 50-column table that stores only 0s in all of its rows. There’s nothing particularly special about this choice other than its very low disk footprint as a CCI, because it compresses so well. On a non-busy system it took around 7.3 seconds to insert one million rows into a CCI. Below is the table definition:

```sql
DROP TABLE IF EXISTS CCI_SOURCE;

CREATE TABLE CCI_SOURCE (
    ID1 SMALLINT, ID2 SMALLINT, ID3 SMALLINT, ID4 SMALLINT, ID5 SMALLINT,
    ID6 SMALLINT, ID7 SMALLINT, ID8 SMALLINT, ID9 SMALLINT, ID10 SMALLINT,
    ID11 SMALLINT, ID12 SMALLINT, ID13 SMALLINT, ID14 SMALLINT, ID15 SMALLINT,
    ID16 SMALLINT, ID17 SMALLINT, ID18 SMALLINT, ID19 SMALLINT, ID20 SMALLINT,
    ID21 SMALLINT, ID22 SMALLINT, ID23 SMALLINT, ID24 SMALLINT, ID25 SMALLINT,
    ID26 SMALLINT, ID27 SMALLINT, ID28 SMALLINT, ID29 SMALLINT, ID30 SMALLINT,
    ID31 SMALLINT, ID32 SMALLINT, ID33 SMALLINT, ID34 SMALLINT, ID35 SMALLINT,
    ID36 SMALLINT, ID37 SMALLINT, ID38 SMALLINT, ID39 SMALLINT, ID40 SMALLINT,
    ID41 SMALLINT, ID42 SMALLINT, ID43 SMALLINT, ID44 SMALLINT, ID45 SMALLINT,
    ID46 SMALLINT, ID47 SMALLINT, ID48 SMALLINT, ID49 SMALLINT, ID50 SMALLINT
);

-- One million rows of zeros: the same value repeated in all 50 columns
INSERT INTO CCI_SOURCE WITH (TABLOCK)
SELECT ID,ID,ID,ID,ID,ID,ID,ID,ID,ID
    , ID,ID,ID,ID,ID,ID,ID,ID,ID,ID
    , ID,ID,ID,ID,ID,ID,ID,ID,ID,ID
    , ID,ID,ID,ID,ID,ID,ID,ID,ID,ID
    , ID,ID,ID,ID,ID,ID,ID,ID,ID,ID
FROM (
    SELECT TOP (1000000) 0 ID
    FROM master..spt_values t1
    CROSS JOIN master..spt_values t2
) t;
```

Next, we need a stored procedure that can save off the previous test's results (as desired) and reset the server for the next test. If a test name is passed in, results from the previous run are saved to CCI_TEST_RESULTS and CCI_TEST_WAIT_STATS; the DDL for those two tables is included as a comment inside the procedure and must be run once beforehand. The procedure always recreates all of the target CCI tables, resets the sequence, clears the buffer pool, and does a few other things.

```sql
CREATE OR ALTER PROCEDURE [dbo].[CCI_TEST_RESET] (@PreviousTestName NVARCHAR(100) = NULL, @DebugMe INT = 0)
AS
BEGIN
    DECLARE
        @tablename SYSNAME,
        @table_number INT = 1,
        @SQLToExecute NVARCHAR(4000);

    /*
    -- Run once before the first test so that results can be saved:
    CREATE TABLE CCI_TEST_WAIT_STATS (
        TEST_NAME NVARCHAR(100),
        WAIT_TYPE NVARCHAR(60),
        WAITING_TASKS_COUNT BIGINT,
        WAIT_TIME_MS BIGINT,
        MAX_WAIT_TIME_MS BIGINT,
        SIGNAL_WAIT_TIME_MS BIGINT
    );

    CREATE TABLE CCI_TEST_RESULTS (
        TEST_NAME NVARCHAR(100),
        TOTAL_SESSION_COUNT SMALLINT,
        TEST_DURATION INT,
        TOTAL_WORK_TIME INT,
        BEST_TABLE_TIME INT,
        WORST_TABLE_TIME INT,
        TOTAL_TABLES_PROCESSED SMALLINT,
        MIN_TABLES_PROCESSED SMALLINT,
        MAX_TABLES_PROCESSED SMALLINT
    );
    */

    SET NOCOUNT ON;

    IF @DebugMe = 0
    BEGIN
        -- Restart the work queue that CCI_RUN_INSERTS pulls table numbers from
        DROP SEQUENCE IF EXISTS CCI_PARALLEL_TEST_SEQ;

        CREATE SEQUENCE CCI_PARALLEL_TEST_SEQ
            AS SMALLINT
            START WITH 1
            INCREMENT BY 1
            CACHE 600;

        -- Save the previous run's results only when a test name is supplied
        -- (a NULL name evaluates to UNKNOWN here, so nothing is saved)
        IF @PreviousTestName <> N''
        BEGIN
            -- Roll per-session timings up into one row per test
            INSERT INTO CCI_TEST_RESULTS WITH (TABLOCK)
            SELECT @PreviousTestName
                , COUNT(*) TOTAL_SESSION_COUNT
                , DATEDIFF(MILLISECOND, MIN(MIN_START_TIME), MAX(MAX_END_TIME)) TEST_DURATION
                , SUM(TOTAL_SESSION_TIME) TOTAL_WORK_TIME
                , MIN(MIN_TABLE_TIME) BEST_TABLE_TIME
                , MAX(MAX_TABLE_TIME) WORST_TABLE_TIME
                , SUM(CNT) TOTAL_TABLES_PROCESSED
                , MIN(CNT) MIN_TABLES_PROCESSED
                , MAX(CNT) MAX_TABLES_PROCESSED
            FROM (
                SELECT
                      SESSION_ID
                    , COUNT(*) CNT
                    , SUM(DATEDIFF(MILLISECOND, START_TIME, END_TIME)) TOTAL_SESSION_TIME
                    , MIN(DATEDIFF(MILLISECOND, START_TIME, END_TIME)) MIN_TABLE_TIME
                    , MAX(DATEDIFF(MILLISECOND, START_TIME, END_TIME)) MAX_TABLE_TIME
                    , MIN(START_TIME) MIN_START_TIME
                    , MAX(END_TIME) MAX_END_TIME
                FROM CCI_TEST_LOGGING_TABLE
                GROUP BY SESSION_ID
            ) t;

            -- Snapshot the wait types of interest (cleared at the end of the previous reset)
            INSERT INTO CCI_TEST_WAIT_STATS WITH (TABLOCK)
            SELECT @PreviousTestName, *
            FROM sys.dm_os_wait_stats
            WHERE wait_type IN (
                  'RESERVED_MEMORY_ALLOCATION_EXT'
                , 'SOS_SCHEDULER_YIELD'
                , 'PAGEIOLATCH_EX'
                , 'MEMORY_ALLOCATION_EXT'
                , 'PAGELATCH_UP'
                , 'PAGEIOLATCH_SH'
                , 'WRITELOG'
                , 'LATCH_EX'
                , 'PAGELATCH_EX'
                , 'PAGELATCH_SH'
                , 'CMEMTHREAD'
                , 'LATCH_SH'
            );
        END;

        -- Fresh per-table timing log for the next run
        DROP TABLE IF EXISTS CCI_TEST_LOGGING_TABLE;

        CREATE TABLE CCI_TEST_LOGGING_TABLE (
            SESSION_ID INT,
            TABLE_NUMBER INT,
            START_TIME DATETIME,
            END_TIME DATETIME
        );
    END;

    -- Recreate the 576 empty CCI target tables (only print the DDL when @DebugMe = 1)
    WHILE @table_number BETWEEN 0 AND 576
    BEGIN
        SET @tablename = N'CCI_PARALLEL_RPT_TARGET_' + CAST(@table_number AS NVARCHAR(3));

        SET @SQLToExecute = N'DROP TABLE IF EXISTS ' + QUOTENAME(@tablename);
        IF @DebugMe = 1
        BEGIN
            PRINT @SQLToExecute;
        END
        ELSE
        BEGIN
            EXEC (@SQLToExecute);
        END;

        SET @SQLToExecute = N'SELECT * INTO ' + QUOTENAME(@tablename) +
            ' FROM CCI_SOURCE WHERE 1 = 0';
        IF @DebugMe = 1
        BEGIN
            PRINT @SQLToExecute;
        END
        ELSE
        BEGIN
            EXEC (@SQLToExecute);
        END;

        SET @SQLToExecute = N'CREATE CLUSTERED COLUMNSTORE INDEX CCI ON ' + QUOTENAME(@tablename);
        IF @DebugMe = 1
        BEGIN
            PRINT @SQLToExecute;
        END
        ELSE
        BEGIN
            EXEC (@SQLToExecute);
        END;

        SET @table_number = @table_number + 1;
    END;

    IF @DebugMe = 0
    BEGIN
        -- Clear caches, read the source table back into memory, and zero the stats
        DBCC DROPCLEANBUFFERS WITH NO_INFOMSGS;
        DBCC FREEPROCCACHE WITH NO_INFOMSGS;
        DBCC FREESYSTEMCACHE('ALL');
        SELECT COUNT(*) FROM CCI_SOURCE; -- read into cache
        DBCC SQLPERF ("sys.dm_os_wait_stats", CLEAR) WITH NO_INFOMSGS;
        DBCC SQLPERF ("sys.dm_os_spinlock_stats", CLEAR) WITH NO_INFOMSGS;
    END;
END;
```
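The results query rolls up in two levels: per session first, then across all sessions. TEST_DURATION is wall-clock time from the earliest start to the latest end, while TOTAL_WORK_TIME adds up every session's insert time. Below is a Python sketch of the same two-level aggregation over rows shaped like CCI_TEST_LOGGING_TABLE. The `LogRow` and `summarize` names are hypothetical, and the millisecond math ignores DATETIME's roughly 3 ms rounding and DATEDIFF's boundary counting:

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Dict, Iterable, List


@dataclass
class LogRow:
    """One row of CCI_TEST_LOGGING_TABLE."""
    session_id: int
    table_number: int
    start_time: datetime
    end_time: datetime


def elapsed_ms(start: datetime, end: datetime) -> int:
    # Whole milliseconds between two timestamps (timedelta // timedelta returns an int)
    return (end - start) // timedelta(milliseconds=1)


def summarize(rows: Iterable[LogRow]) -> Dict[str, int]:
    """Roll up like CCI_TEST_RESULTS: group by session, then aggregate the sessions."""
    by_session: Dict[int, List[LogRow]] = defaultdict(list)
    for row in rows:
        by_session[row.session_id].append(row)

    counts: List[int] = []
    session_totals: List[int] = []
    table_times: List[int] = []
    starts: List[datetime] = []
    ends: List[datetime] = []
    for session_rows in by_session.values():
        times = [elapsed_ms(r.start_time, r.end_time) for r in session_rows]
        counts.append(len(session_rows))
        session_totals.append(sum(times))
        table_times.extend(times)
        starts.append(min(r.start_time for r in session_rows))
        ends.append(max(r.end_time for r in session_rows))

    return {
        "TOTAL_SESSION_COUNT": len(by_session),
        "TEST_DURATION": elapsed_ms(min(starts), max(ends)),  # wall-clock time
        "TOTAL_WORK_TIME": sum(session_totals),  # summed per-table insert time
        "BEST_TABLE_TIME": min(table_times),
        "WORST_TABLE_TIME": max(table_times),
        "TOTAL_TABLES_PROCESSED": sum(counts),
        "MIN_TABLES_PROCESSED": min(counts),
        "MAX_TABLES_PROCESSED": max(counts),
    }
```

Under perfect scaling, TOTAL_WORK_TIME would stay near 576 × 7.3 seconds regardless of session count, so growth in that column is a rough measure of contention.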

Finally we need a stored procedure that can be called to do as many CCI inserts as it can as quickly as possible. I used the following code:

```sql
CREATE OR ALTER PROCEDURE [dbo].[CCI_RUN_INSERTS] (@DebugMe INT = 0)
AS
BEGIN
    DECLARE
        @table_number INT,
        @tablename SYSNAME,
        @SQLToExecute NVARCHAR(4000),
        @start_loop_time DATETIME;

    SET NOCOUNT ON;

    -- Claim the first table number from the shared sequence
    SELECT @table_number = NEXT VALUE FOR CCI_PARALLEL_TEST_SEQ;

    -- Keep claiming tables until the sequence runs past the last table
    WHILE @table_number BETWEEN 0 AND 576
    BEGIN
        SET @start_loop_time = GETDATE();
        SET @tablename = N'CCI_PARALLEL_RPT_TARGET_' + CAST(@table_number AS NVARCHAR(3));

        -- Serial TABLOCK insert of the entire source table into this CCI
        SET @SQLToExecute = N' INSERT INTO ' + QUOTENAME(@tablename) +
            N' WITH (TABLOCK) SELECT * FROM CCI_SOURCE WITH (TABLOCK) OPTION (MAXDOP 1)';

        IF @DebugMe = 1
        BEGIN
            PRINT @SQLToExecute;
        END
        ELSE
        BEGIN
            EXEC (@SQLToExecute);

            -- Log per-table timings for CCI_TEST_RESET to aggregate later
            INSERT INTO CCI_TEST_LOGGING_TABLE
            VALUES (@@SPID, @table_number, @start_loop_time, GETDATE());
        END;

        SELECT @table_number = NEXT VALUE FOR CCI_PARALLEL_TEST_SEQ;
    END;
END;
```

To vary the number of concurrent queries, I launched sessions with sqlcmd from a .bat file. Each set of 12 sessions had the following format:

```bat
START /B sqlcmd -d your_db_name -S your_server_name -Q "EXEC dbo.[CCI_RUN_INSERTS]" > nul
START /B sqlcmd -d your_db_name -S your_server_name -Q "EXEC dbo.[CCI_RUN_INSERTS]" > nul
START /B sqlcmd -d your_db_name -S your_server_name -Q "EXEC dbo.[CCI_RUN_INSERTS]" > nul
START /B sqlcmd -d your_db_name -S your_server_name -Q "EXEC dbo.[CCI_RUN_INSERTS]" > nul
START /B sqlcmd -d your_db_name -S your_server_name -Q "EXEC dbo.[CCI_RUN_INSERTS]" > nul
START /B sqlcmd -d your_db_name -S your_server_name -Q "EXEC dbo.[CCI_RUN_INSERTS]" > nul
START /B sqlcmd -d your_db_name -S your_server_name -Q "EXEC dbo.[CCI_RUN_INSERTS]" > nul
START /B sqlcmd -d your_db_name -S your_server_name -Q "EXEC dbo.[CCI_RUN_INSERTS]" > nul
START /B sqlcmd -d your_db_name -S your_server_name -Q "EXEC dbo.[CCI_RUN_INSERTS]" > nul
START /B sqlcmd -d your_db_name -S your_server_name -Q "EXEC dbo.[CCI_RUN_INSERTS]" > nul
START /B sqlcmd -d your_db_name -S your_server_name -Q "EXEC dbo.[CCI_RUN_INSERTS]" > nul
START /B sqlcmd -d your_db_name -S your_server_name -Q "EXEC dbo.[CCI_RUN_INSERTS]" > nul
ping 192.2.0.1 -n 1 -w 1 > nul
```
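Editing the .bat file by hand for each session count gets tedious. Below is a small Python sketch that generates it instead. It assumes the same placeholders and delay line as the batch file above and groups sessions by 12; the `build_batch_file` and `write_batch_file` helpers are hypothetical, not part of the original test kit:

```python
from typing import List

SQLCMD_CALL = 'START /B sqlcmd -d {db} -S {server} -Q "EXEC dbo.[CCI_RUN_INSERTS]" > nul'
DELAY = "ping 192.2.0.1 -n 1 -w 1 > nul"  # same delay line as the original .bat file


def build_batch_file(session_count: int, db: str = "your_db_name",
                     server: str = "your_server_name", group_size: int = 12) -> str:
    """Return .bat text that starts session_count sessions, pausing after each full group."""
    call = SQLCMD_CALL.format(db=db, server=server)
    lines: List[str] = []
    for i in range(1, session_count + 1):
        lines.append(call)
        if i % group_size == 0:  # one soft-NUMA node's worth of sessions started
            lines.append(DELAY)
    return "\n".join(lines) + "\n"


def write_batch_file(path: str, session_count: int) -> None:
    # newline="\r\n" translates each "\n" into a Windows line ending on write
    with open(path, "w", newline="\r\n") as f:
        f.write(build_batch_file(session_count))


if __name__ == "__main__":
    print(build_batch_file(32), end="")
```

Session counts that are not multiples of 12 simply end with a partial group and no trailing delay.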

My workflow for each test was to put the desired number of calls to the stored procedure in the .bat file, run the test, and check the results to make sure that the test was a good one. If it was, I saved off the results using the reset procedure. If not, I ran the reset procedure without a test name and tried again.

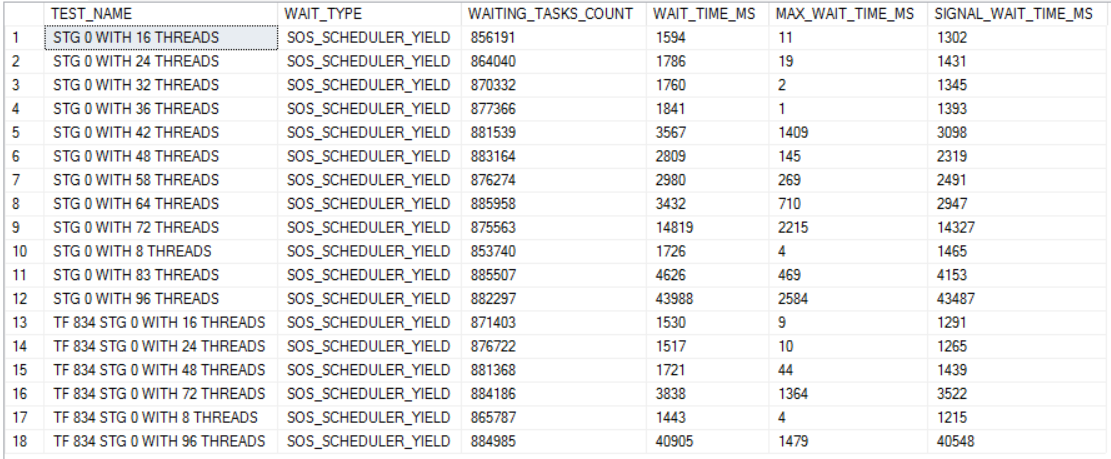

The ping command is there to add a small delay between sets of queries. I found that adding such a delay led to fewer sessions doubling up on schedulers. I picked 12 sessions per set because that's the number of soft-NUMA nodes. Sometimes tests would accumulate significant SOS_SCHEDULER_YIELD waits, which meant that they couldn't be accurately compared to other tests. My fix was to just run the test again. It required a bit of patience, but I never had to rerun a test more than once. SOS waits weren't eliminated, but I'd say they fell to acceptable levels:

The right way to avoid SOS waits for testing like this (which might require every user session to go on its own scheduler) would be to set up 96 manual soft-NUMA nodes. But who has time for that?

Test Results

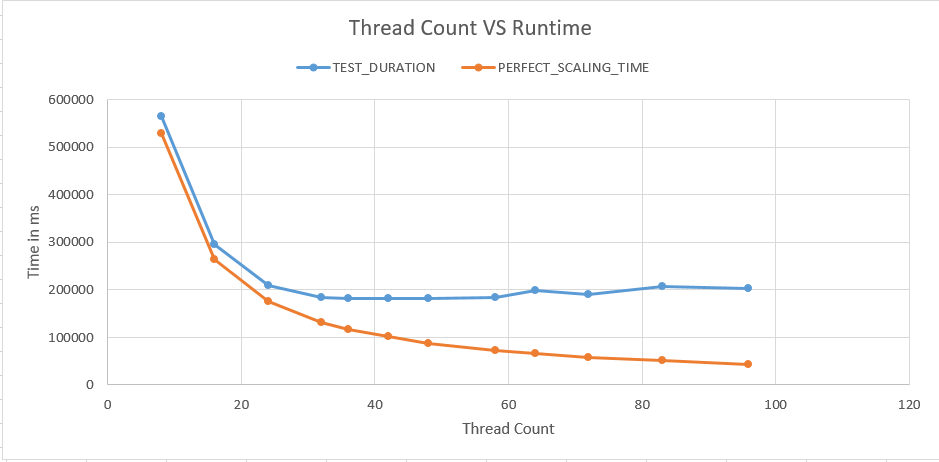

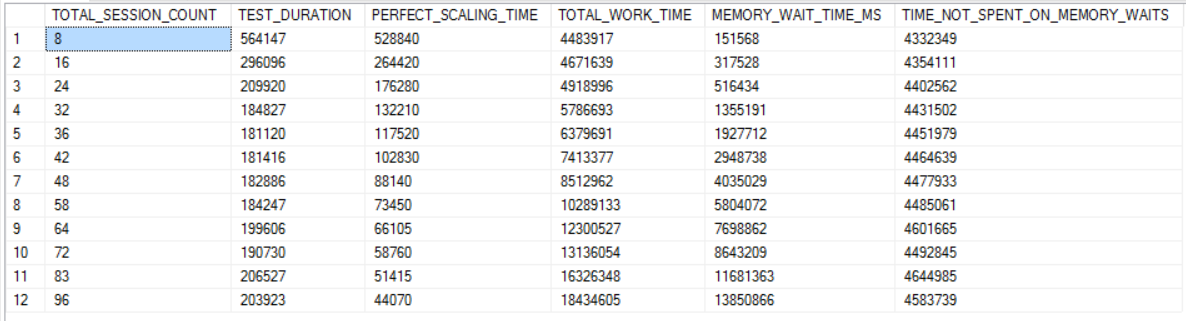

Twelve tests with different numbers of active queries were run. I picked session counts that divide more or less evenly into 576 to keep work balanced between threads. The blue line in the chart below measures how long each test took from start to finish, and the orange line represents how fast the test could have completed if there were no contention on the server whatsoever:

Naturally, we can't expect the two lines to match perfectly, but runtime stops improving past 32 threads. In fact, the workload takes longer with 96 threads than with 32 threads.
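The post doesn't spell out how the orange line was computed. One plausible reconstruction assumes that each insert takes the 7.3 seconds measured on an idle system and that sessions never slow each other down. Under those assumptions, the busiest session loads ceil(576 / N) tables, so the best possible runtime is that count times 7.3 seconds. Below is a Python sketch; the function names are hypothetical:

```python
import math
from typing import List

TABLE_COUNT = 576
UNCONTENDED_INSERT_SECONDS = 7.3  # one 1M-row CCI insert on a non-busy system


def ideal_duration_seconds(session_count: int) -> float:
    """Best-case runtime: the busiest session's table count times the idle insert time."""
    tables_for_busiest_session = math.ceil(TABLE_COUNT / session_count)
    return tables_for_busiest_session * UNCONTENDED_INSERT_SECONDS


def even_session_counts(max_sessions: int = 96) -> List[int]:
    """Session counts that split the 576 tables evenly, so no session does extra work."""
    return [n for n in range(1, max_sessions + 1) if TABLE_COUNT % n == 0]


if __name__ == "__main__":
    for sessions in (8, 16, 32, 48, 64, 96):
        print(f"{sessions:>3} sessions: {ideal_duration_seconds(sessions):7.1f} s")
```

For example, under these assumptions 96 sessions can finish no faster than 6 × 7.3 = 43.8 seconds, and 32 sessions no faster than 18 × 7.3 = 131.4 seconds.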

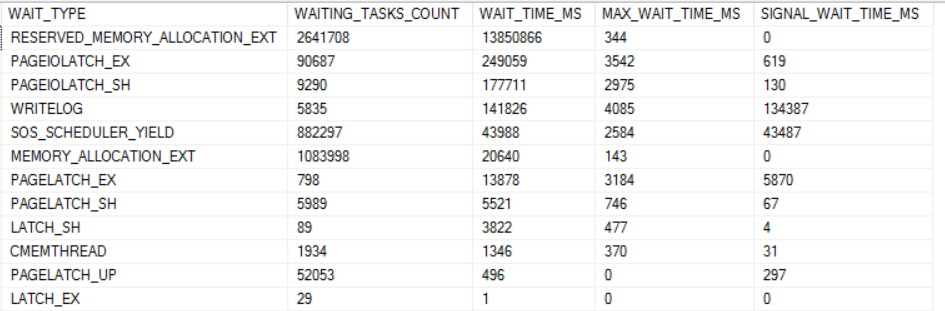

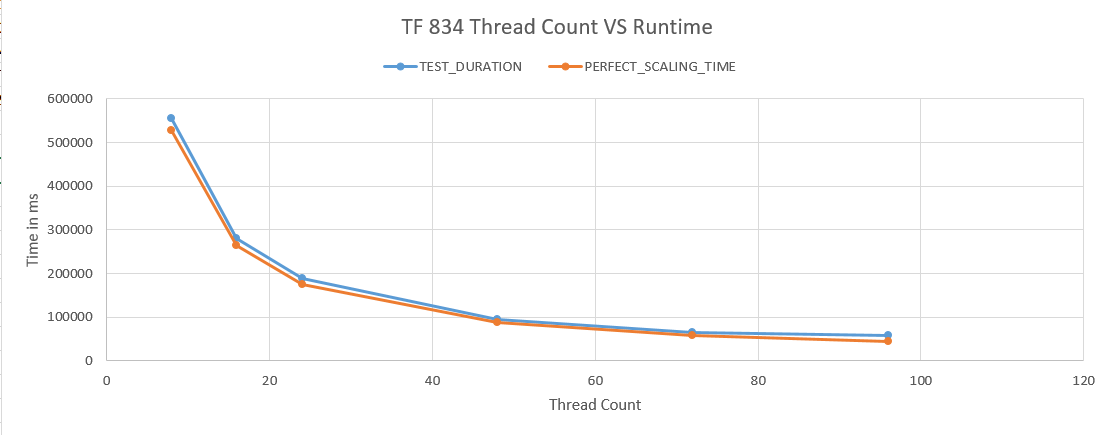

The dominant wait event for the higher thread count runs is RESERVED_MEMORY_ALLOCATION_EXT. Below is a chart of all of the wait events worth mentioning for the 96-thread run:

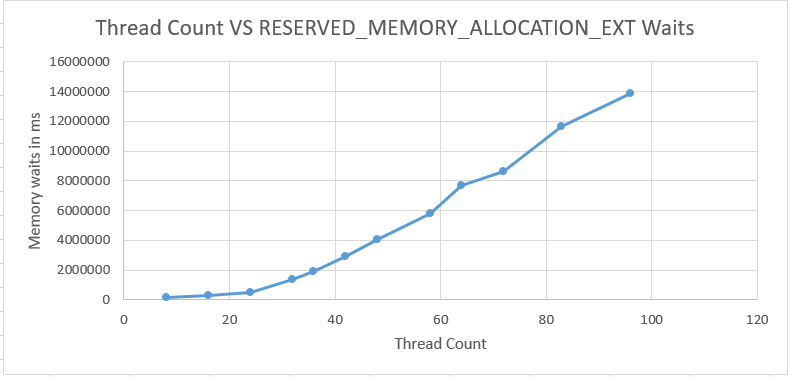

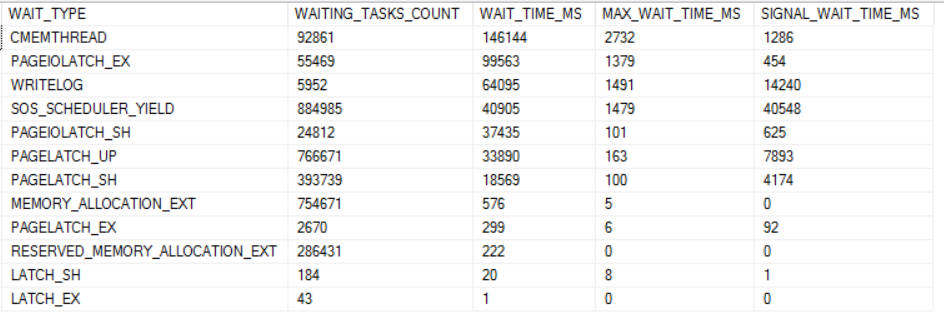

The total number of wait events for RESERVED_MEMORY_ALLOCATION_EXT is very consistent between runs. However, the average wait time significantly increases as the number of concurrent queries increases:

In fact, it could be said that nearly all of the additional worker time past 32 threads is spent waiting on memory. The final column in the chart below is the total time spent in SQL Server minus wait time for RESERVED_MEMORY_ALLOCATION_EXT, and the values in that column are remarkably consistent.
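Both derived numbers are simple arithmetic over the saved data. The average wait is WAIT_TIME_MS / WAITING_TASKS_COUNT from CCI_TEST_WAIT_STATS. The final column can be approximated as TOTAL_WORK_TIME from CCI_TEST_RESULTS minus RESERVED_MEMORY_ALLOCATION_EXT wait time, assuming TOTAL_WORK_TIME is what "total time spent in SQL Server" refers to. Below is a Python sketch with hypothetical function names:

```python
def average_wait_ms(wait_time_ms: int, waiting_tasks_count: int) -> float:
    """Average wait per task from one CCI_TEST_WAIT_STATS row."""
    if waiting_tasks_count == 0:
        return 0.0
    return wait_time_ms / waiting_tasks_count


def work_time_excluding_memory_waits(total_work_time_ms: int,
                                     reserved_memory_wait_ms: int) -> int:
    """TOTAL_WORK_TIME minus RESERVED_MEMORY_ALLOCATION_EXT wait time."""
    return total_work_time_ms - reserved_memory_wait_ms


if __name__ == "__main__":
    # Illustrative numbers only, not measurements from these tests: with the same
    # waiting_tasks_count, more total wait time means a longer average wait.
    print(average_wait_ms(20_000, 10_000), average_wait_ms(80_000, 10_000))
```

If the second function returns roughly the same value for every run, the extra work time at high concurrency is almost entirely memory waits, which is the argument the chart makes.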

In my experience, when a server gets into a situation with high memory waits caused by too much concurrent CCI activity, all queries on the server that use a memory grant can be affected. For example, I've seen sp_whoisactive run for longer than 90 seconds.

Not all CCIs will suffer from this scalability problem. I was able to achieve good scalability with some artificial tables, but all of the real target tables that I tested had excessive memory waits at high concurrency. Perhaps tables that require more CPU to compress naturally spread out their memory requests, so the underlying OS is better able to keep up.

Test Results With Trace Flag 834

Microsoft strongly recommends against using trace flag 834 with columnstore tables. There's even an article dedicated to that warning. I was desperate, so I tried it anyway. The difference was night and day:

Enabling trace flag 834 does at least three things: SQL Server grows to max server memory on startup, AWE memory is used for memory access, and large pages are used for the buffer pool. I didn't see gains when using LPIM (which uses AWE memory) or when growing memory to max server memory before running tests with the conventional memory model, so I suspect that large pages are making a key difference. With TF 834 enabled, wait time for RESERVED_MEMORY_ALLOCATION_EXT is under a second for all tests.

Scalability is diminished a bit for the 96-thread run, where some wait events creep up:

All of these wait events can be troubleshot in conventional ways, except possibly for CMEMTHREAD. There's no longer a large amount of time spent outside of SQL Server in the OS.

Other Workarounds

Without trace flag 834, this can be a difficult problem to work around. The two main strategies are to spread out CCI insert activity as much as possible during the ETL and to reduce the memory usage of queries that run at the same time as the CCI inserts. For example, consider a query that inserts into a CCI and also performs a large hash join. If that hash join can be moved elsewhere in the process, you might come out ahead by reducing contention on memory.
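One way to spread out CCI insert activity is to cap how many inserts run at once, so that extra work waits in a queue instead of competing for memory. Below is a minimal Python sketch of a bounded worker pool. It assumes a hypothetical `load_table` callable that performs one CCI insert. The default cap of 32 is only an illustration based on where runtime stopped improving in these tests, not a general recommendation:

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable, List


def load_all_tables(table_numbers: Iterable[int],
                    load_table: Callable[[int], int],
                    max_concurrent_inserts: int = 32) -> List[int]:
    """Run load_table once per table, with at most max_concurrent_inserts in flight."""
    with ThreadPoolExecutor(max_workers=max_concurrent_inserts) as pool:
        # pool.map preserves input order; list() waits for every insert to finish
        return list(pool.map(load_table, table_numbers))


class PeakTracker:
    """Records the highest number of simultaneous calls, to check that the cap holds."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._active = 0
        self.peak = 0

    def fake_load(self, table_number: int) -> int:
        with self._lock:
            self._active += 1
            self.peak = max(self.peak, self._active)
        time.sleep(0.001)  # stand-in for a real CCI insert
        with self._lock:
            self._active -= 1
        return table_number


if __name__ == "__main__":
    tracker = PeakTracker()
    done = load_all_tables(range(1, 577), tracker.fake_load, max_concurrent_inserts=32)
    print(len(done), "tables loaded, peak concurrency", tracker.peak)
```

The same idea applies to whatever orchestrates the real ETL: the cap limits how many CCI inserts request memory at the same moment, at the cost of a longer queue.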

Other than that, there's some evidence that virtualized servers are not a good fit for this type of workload. Large virtual guests experience the memory waits at an increased rate, but it isn't yet clear if the problem can be avoided through some change in VM configuration.

Final Thoughts

It's hard not to conclude that TF 834 is necessary to get scalability for columnstore ETLs on very large servers. Hopefully Microsoft will make TF 834 compatible with columnstore someday.

Thanks for reading!

Going Further

If this is the kind of SQL Server stuff you love learning about, you'll love my training. I'm offering a 75% discount to my blog readers if you click from here. I'm also available for consulting if you just don't have time for that and need to solve performance problems quickly.

One thought on “Large Clustered Columnstore Index ETLs Cannot Scale Without TF 834 In SQL Server”
