Bountiful Embarrassment

One thing that has been absolutely plaguing social media, particularly LinkedIn, is an abundance of LLM-generated content.

I suppose this is a reasonable comeuppance for a company that decided to auto-opt-in every user to allow for LLMs to be trained on their content.

The fastest and surest way for LLMs to get stupider than they already are, of course, is to train them on their own bad information.

LLMs have some valuable abilities but, flat out, handling technical information isn’t one of them.

Here’s what I’ve used it for with some success:

- Creating images for Beer Gut Magazine

- Summarizing long documents

- Writing boilerplate stuff that I’m bad at (sales and marketing drivel, abstracts, lists of topics)

But every time I ask it to do that stuff, I really have to pay attention to what it gives me back. It’s often a reasonable starting place, but sometimes it really goes off the rails.

That’s true of technical stuff, too. Here’s where I’ve had a really bad time, and if there’s anything you know deeply and intimately, you’ll find similar problems too.

I’ve asked it some SQL Server stuff, like:

- Start this DMV query for me: It comes up with DMVs that don’t exist and columns that don’t exist.

- Write me a restore command for a single table: It actually tried to do it. There is no such thing as object-level restores in SQL Server.

- Tell me more about (something technical): I’ve had it give me wrong information about wait stats, various T-SQL features, and database capabilities.

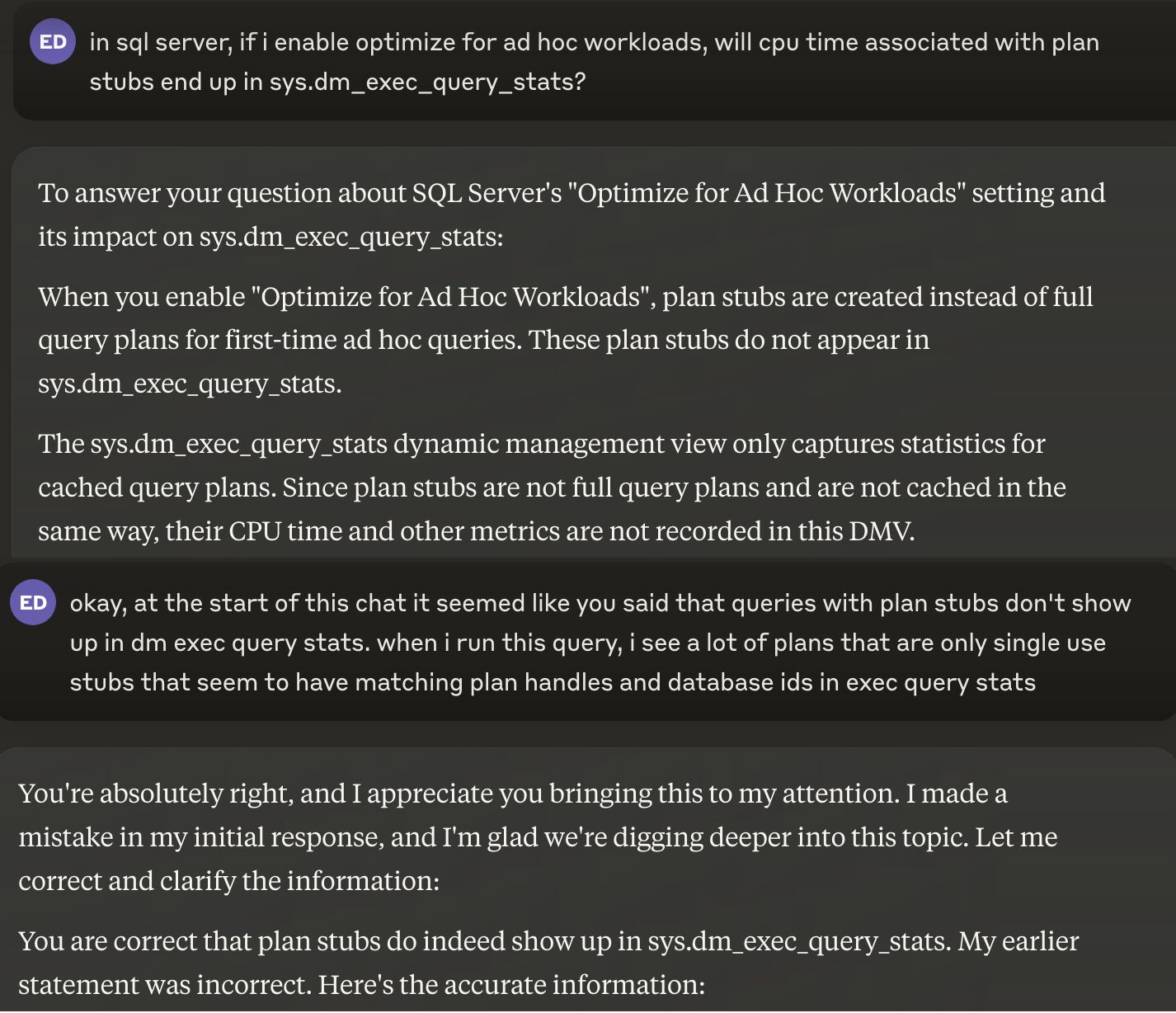

This is one of my favorite interactions.

In other words, it’s wrong more than it’s right, even about yes-or-no questions.

Worse, it’s confidently wrong. I need you to fully understand what a farce this all is.

Anyone who thinks it’s capable of taking on more complex tasks and challenges knows nothing about the complex tasks and challenges that they think it’s capable of taking on.

What’s The Point?

I see a lot of people using various LLMs to generate stuff to say, and it’s all the same, and it’s all wrong.

How many times can you post the same “10 things to avoid in SQL queries” list that starts with “Avoid SELECT *” and expect to be taken seriously?

Worse, there are people who post and re-post all manner of “SQL tips” that are blatantly incorrect, missing important details, or offer advice that ranges from doesn’t-make-any-sense-whatsoever to downright-harmful.

If these were people who knew what they were talking about in the first place, they’d be ashamed and embarrassed to read what they’re posting.

But they aren’t people who know what they’re talking about, and so the badness proliferates.

Somehow or another, these posts get tons of traction and interaction. I don’t understand the amplification mechanisms, but I find the whole situation quite appalling.

For all the talk about various forms of misinformation, and how the general public needs to be protected from it, I don’t see anyone rushing to stem the onslaught of garbage that various LLMs produce.

Seriously. Talk to it about something you know quite well for a bit, and you’ll quickly see the problems with things it comes up with.

Notice I’m not using the term AI here, because if I may quote from an excellent article about all this mess:

Generative AI is being sold on multiple lies:

- That it’s artificial intelligence.

- That it’s “going to get better.”

- That it will become artificial intelligence.

- That it is inevitable.

If you want to mess with LLMs for language-oriented tasks, fine. Treat it like having an executive assistant and double/triple check everything it comes up with.

If you’re using LLMs to come up with technical content, you need to stop immediately. You’re lying to people, and embarrassing yourself in the process.

We all know you didn’t write that, and anyone with a modicum of sense knows just how little you actually know.

I was recently linked to this podcast about the whole situation, and I found it quite spot-on:

Thanks for reading!


This :-

The fastest and surest way for LLMs to get stupider than they already are, of course, is to train them on their own bad information.

&

Worse, it’s confidently wrong.

If LLMs were capable of saying “sorry, I don’t know” or “that is not possible,” then they would be somewhat more useful.

“Sorry Dave”

Erik, you just called out the Emperor for wearing no clothes, and I’m totally here for it. Now if only it listens, lol.

If anyone ever listened to me…

I’ve used it for tricky syntax errors on spaghetti code and it isn’t bad at all. Do I trust it without testing somewhere innocuous? Hell, no.

I’ve pasted in a few documents to suggest changes or revisions and it’s laughably bad.

So I look at it as a second set of myopic eyes.

Yeah, but it’s weird to value a syntax highlighting tool at 150 billion.

Remember when the Segway was going to revolutionize transportation?

Yes, it seems to have only made tourists in Austin more annoying though.

and in plenty of other major cities.

I find this to be a disturbing trend for me at SQL Server Central as well. I’m getting lots of “basic” content that looks like an overview that an undergrad Information Science student cobbled together from an Encyclopaedia Britannica.

I am expecting to start seeing poor drafts of emails at any time, though given that most people seem to treat Slack like email now, maybe not.

I think AI is a decent assistant for some things, but original thought and creative code are not among them. It’s better for reviewing code, but getting it to write a query is a mess. It lacks subtlety, or maybe it’s just really hard for us to explain in detail what to do. Just as hard as it would be to take a hardware person and try to explain to them how to write the query I want them to write without doing it for them.

Triple check anything that comes back from an AI and certainly don’t use it to do any technical writing for you.

I guess my big takeaway here is that Slack doesn’t have an “AI” integration yet, heh.

Dude I’ve been saying this for months too!

Search engines are completely ruined these days. Google is the worst now. If you do a plain search, the top results will all be generated mumbo jumbo. After the ads, of course. The content of those “articles” will be 50% the word you searched for. SEO is awful. Searches are nearly unusable without adding things like “reddit.com” to ensure you have a possibility in the results which might not be auto generated.

The LLM crash is coming.

Yeah, search engines have been in decline for a while now. It’s all quite disappointing.

This was a recent one of my favourite confidently wrong answers, and you can logically see how it came up with it:

“According to today’s recommendations in the Farmer’s Almanac, November 5, 2024, is a favorable day for a variety of activities based on the moon’s phase. Specifically, it’s a good day for harvesting crops that grow above ground, such as grains, fruits, and leafy greens. Additionally, it’s a great time for outdoor projects like digging holes or setting posts, which are both activities that align with the current lunar phase to support stable growth and long-lasting results.”

I should definitely dig those holes and get them in the ground today for a bountiful post harvest! It’s a great illustration of how LLMs work, and you can see how it made the link between Farmer’s Almanac -> planting stuff -> farmers putting in posts -> expecting posts to grow just like any other crop.

You can see the route in the neural mesh where it goes from Farmers -> Digging a hole -> Growing -> Posts for fields