ErikGPT

I’m going to be totally open and honest with you, dear reader: I’ve been experimenting with… AI.

See, I’m just a lonely independent consultant, and sometimes it’s just nice to have someone to talk to. It’s also kind of fun to take a query idea you have, and ask “someone” else to write it to see what they’d come up with.

ChatGPT (for reference, 4 and 4o) does a rather okay job sometimes. In fact, when I ask it to write a query, it usually comes up with a query that looks a lot like the ones that I have to fix when I’m working with clients.

If I poke and prod it enough about the things that it has done wrongly, it will agree with me and do things the right way, eventually. That is an improvement over your average T-SQL developer.

Your average T-SQL developer will spend a terrible amount of time trying to figure out ways to write queries incorrectly, even when you show them the right way to do something, often under the assumption that they’ve found the one time it’s okay to do it wrong.

For this post, I came up with a query idea, wrote a query that did what I wanted, and then asked the AI to write its own version.

It came pretty close in general, and even added in a little touch that I liked and hadn’t thought of.

Duplicate Post Finder

Here’s the query I wrote, combined with the nice touch that ChatGPT added.

WITH

DuplicateTitles AS

(

SELECT

Title,

EarliestPostId = MIN(p.Id),

FirstPostDate = MIN(p.CreationDate),

LastPostDate = MAX(p.CreationDate),

DuplicatePostIds =

STRING_AGG

(CONVERT(varchar(MAX), p.Id), ', ')

WITHIN GROUP

(ORDER BY p.Id),

TotalDupeScore = SUM(p.Score),

DuplicateCount = COUNT_BIG(*) - 1

FROM dbo.Posts AS p

WHERE p.PostTypeId = 1

GROUP BY

p.Title

HAVING

COUNT_BIG(*) > 1

)

SELECT

dt.Title,

dt.FirstPostDate,

dt.LastPostDate,

dt.DuplicatePostIds,

dt.DuplicateCount,

TotalDupeScore =

dt.TotalDupeScore - p.Score

FROM DuplicateTitles dt

JOIN dbo.Posts p

ON dt.EarliestPostId = p.Id

AND p.PostTypeId = 1

ORDER BY

dt.DuplicateCount DESC,

TotalDupeScore DESC;

If you’re wondering what the nice touch is, it’s the - 1 in DuplicateCount = COUNT_BIG(*) - 1, and I totally didn’t think of doing that, even though it makes total sense.

So, good job there.

Let’s Talk About Tuning

To start, I added this index. Some of these columns could definitely be moved to the includes, but I wanted to see how having as many of the aggregation columns in the key of the index would help with sorting that data.

Those datums? These datas? I think one of those is right, probably.

CREATE INDEX

p

ON dbo.Posts

(PostTypeId, Title, CreationDate, Score)

WITH

(SORT_IN_TEMPDB = ON, DATA_COMPRESSION = PAGE);

It leads with PostTypeId, since that’s the only column we’re filtering on to find questions, which are the only things that can have titles.

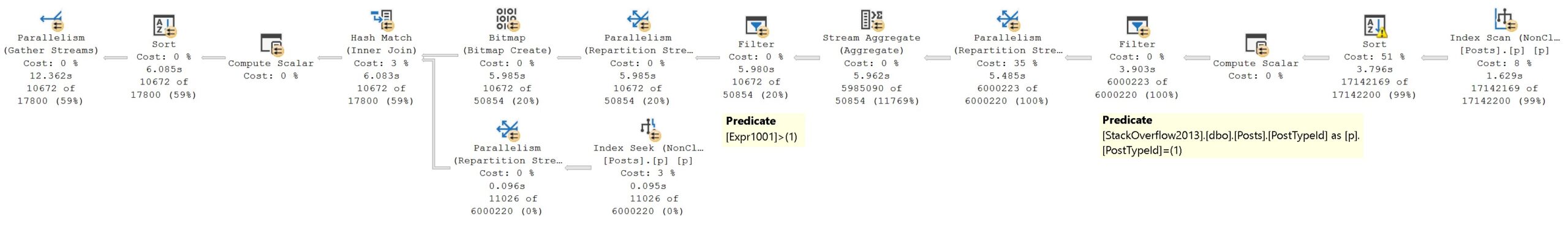

But SQL Server’s cost-based optimizer makes a very odd choice here. Let’s look at that there query plan.

There’s one expected Filter in the query plan, for the COUNT_BIG(*) > 1 predicate, which makes absolute sense. We don’t know what the count will be ahead of time, so we have to calculate and filter it on the fly.

The one that is entirely unexpected is for PostTypeId = 1, because WE HAVE AN INDEX THAT LEADS WITH POSTTYPEID.

¿Por que las hamburguesas, SQL Server?

Costing vs. Limitations

I’ve written in the past about, quite literally not figuratively, how Max Data Type Columns And Predicates Aren’t SARGable.

My first thought was that that, since we’re doing this: (CONVERT(varchar(MAX), p.Id), ', '), that the compute scalar right before the filter was preventing the predicate on PostTypeId from being pushed into an index seek.

Keep in mind that this is quite often necessary when using STRING_AGG, because the implementation is pretty half-assed even by Microsoft standards. And unfortunately, the summer intern who worked on it has since moved on to be a Senior Vice President elsewhere in the organization.

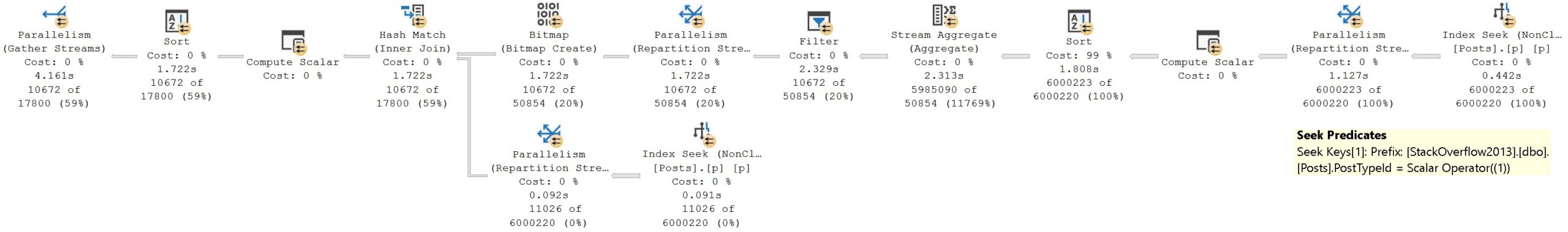

At first I experimented with using smaller byte lengths in the convert. And yeah, somewhere in the 500-600 range, the plan would change to an index seek. But this wasn’t reliable. Different stats samplings and compatibility levels would leave me with different plans (switching between a seek and a scan). The only thing that worked reliably is using a FORCESEEK hint to override the optimizer’s mishandling.

This changes the plan to something quite agreeable, that no longer takes 12 seconds.

So why the decision to use the first plan, au naturale, instead of the plan that took me forcing things to seek?

- 12 second plan: 706 query bucks

- 4 second plan: 8,549 query bucks

The faster plan was estimated to cost nearly 10x the query bucks to execute. Go figure.

For anyone who needed a reminder:

- High cost doesn’t mean slow

- Low cost doesn’t mean fast

- All costs are estimates, with no bearing on the reality of query execution

Thanks for reading!

Going Further

If this is the kind of SQL Server stuff you love learning about, you’ll love my training. I’m offering a 75% discount to my blog readers if you click from here. I’m also available for consulting if you just don’t have time for that, and need to solve database performance problems quickly. You can also get a quick, low cost health check with no phone time required.

Ooo, the count -1 idea is cute! I love AI for stuff like this.

“using the Stack Overflow schema…” can get a lot of weird queries started for you, hahaha.