Max For The Minimum

In an earlier post, we talked about how strings change the way SQL Server’s optimizer gives memory to queries under certain conditions. In that post, I talked about why MAX datatypes have the MAX problems.

In this post we’re going to look at a couple other issues with them:

- You can’t put them in the key of an index

- You can’t push predicated down to them

I know what you’re thinking, here. You’d never do that; you’re much smarter. But someday you might have to explain to someone all the reasons why they shouldn’t do that, and you might want some more in-depth reasons other than “it’s bad”.

Trust me, I have to explain this to people all the time, and I wish I had a few great resources for it.

Like these posts, I guess.

Maxamonium

First, we have have this Very Useful™ query.

SELECT c = COUNT_BIG(*) FROM dbo.Posts AS P WHERE P.Body LIKE N'SQL%';

The plan stinks and it’s slow as all get out, so we try to create an index.

CREATE INDEX not_gonna_happen

ON dbo.Posts(Body);

But SQL Server denies us, because the Body column is nvarchar(max).

Msg 1919, Level 16, State 1, Line 7 Column 'Body' in table 'dbo.Posts' is of a type that is invalid for use as a key column in an index.

Second Chances

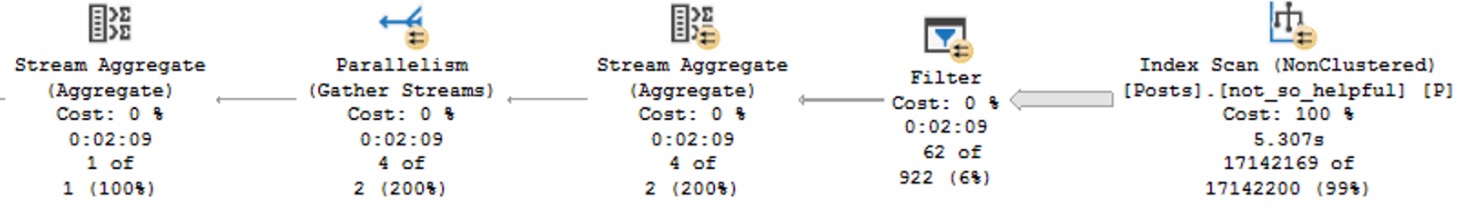

Our backup idea is to create this index, which still won’t make things much better:

CREATE INDEX not_so_helpful

ON dbo.Posts(Id) INCLUDE(Body);

MAX columns can be in the includes list, but includes aren’t very effective for searching, unless they’re part of a filtered index. Since we don’t know what people will search for, we can’t create an explicit filter on the index either.

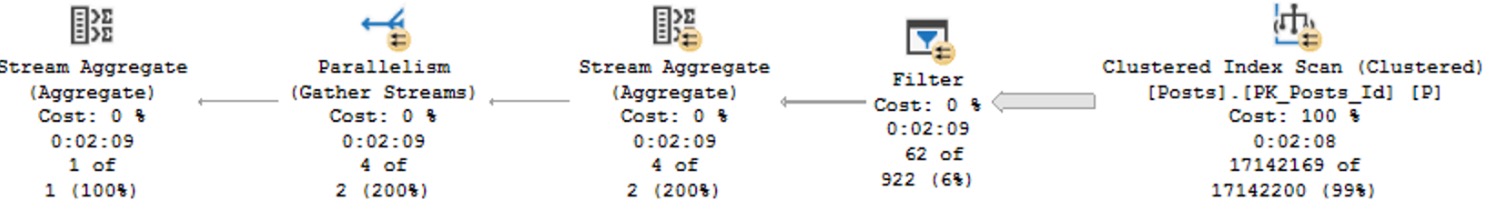

Even with a smaller index to read from, we spend a full two minutes filtering data out, because searching for N'SQL%' in our where clause can’t be pushed to when we scan the index.

And Sensibility

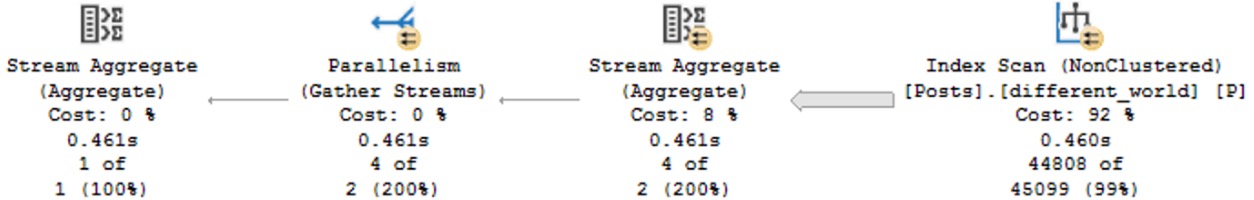

Let’s contrast that with a similar index and search of a column that’s only nvarchar(150). Sure, it’s not gonna find the same things. I just want you to see the difference in the query plan and time when we’re not hitting a (max) column.

This isn’t gonna help you if you genuinely do need to store data up to ~2GB in size in a single column, but it might help people who used a max length “just to be safe”.

CREATE INDEX different_world

ON dbo.Posts(Id) INCLUDE(Title);

SELECT c = COUNT_BIG(*) FROM dbo.Posts AS P WHERE P.Title LIKE N'SQL%';

But if you fumbled around and found out, you might be able to downsize your columns to a byte length that actually fits the data, and do a lot better performance-wise. This search only takes about 460 milliseconds, even if we scan the entire index.

You may not like it, but this is what better performance looks like.

Going Further

If this is the kind of SQL Server stuff you love learning about, you’ll love my training. I’m offering a 75% discount to my blog readers if you click from here. I’m also available for consulting if you just don’t have time for that and need to solve performance problems quickly.

Related Posts

- Software Vendor Mistakes With SQL Server: Indexing #Temp Tables Incorrectly

- Software Vendor Mistakes With SQL Server: Thinking Index Rebuilds Solve Every Problem

- Software Vendor Mistakes With SQL Server: Lowering Fill Factor For Every Index

- Software Vendor Mistakes With SQL Server: Not Using Filtered Indexes Or Indexed Views