101ers

This is still one of the most common problems I see in queries.

People are terrified of NULLs. People are afraid to merge on freeways in Los Angeles.

What results is this endless stream of poorly performing queries, and some surprise logic bugs along the way.

I don’t have much more else of an intro. The TL;DR is that you should use natural expressions like IS NULL or IS NOT NULL, rather than any of the built in functions available to you in SQL Server, like ISNULL, COALESCE, et al. which are presentation layer functions with no relational meaning whatsoever.

From here on out, we’ll be calling them unnatural expressions. Perhaps that will get through to you.

Tuning Wizard

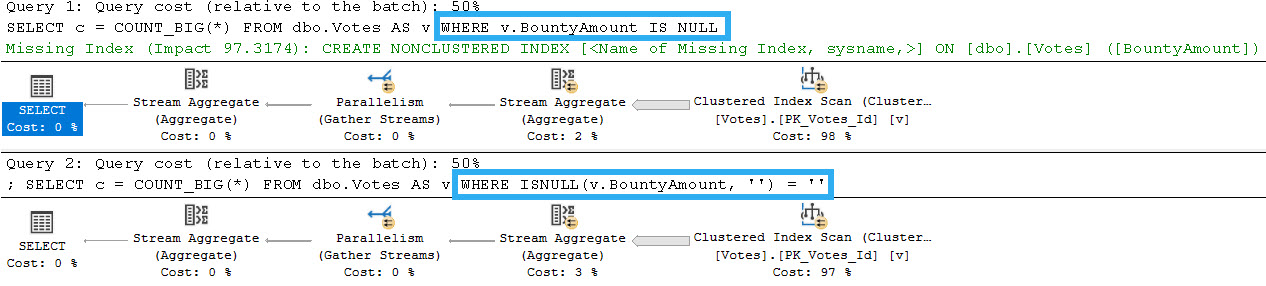

First is something I’ve covered before, but when you use unnatural expressions, the optimizer will not give you feedback about useful indexes.

The first query generates a missing index request, the second one does not. The optimizer has abandoned all hope with the use of an unnatural expression.

Lethargy

The other issue with unnatural expressions comes down to implicit conversion.

Take this, for instance.

DECLARE

@i int = 0;

SELECT

c =

CASE ISNULL(@i, '')

WHEN ''

THEN 1

ELSE 0

END;

This will return a 1, because 0 and ” can be implicitly converted.

Perhaps less obvious, and more rare, is this:

DECLARE

@d datetime = '19000101';

SELECT

c =

CASE ISNULL(@d, '')

WHEN ''

THEN 1

ELSE 0

END;

Which will also return 1.

Not many databases have stuff going back to 1900, but I do see people using that as a canary value often enough.

Perfidy

If that’s not enough to get you off the idea, let’s look at how this stuff plays out in the real world.

First, let’s get ourselves an index. Without that, there’s fundamentally no difference in performance.

CREATE INDEX v ON dbo.Votes

(BountyAmount);

Our gold standard will be these two queries:

SELECT

c = COUNT_BIG(*)

FROM dbo.Votes AS v

WHERE v.BountyAmount IS NULL;

SELECT

c = COUNT_BIG(*)

FROM dbo.Votes AS v

WHERE v.BountyAmount IS NOT NULL;

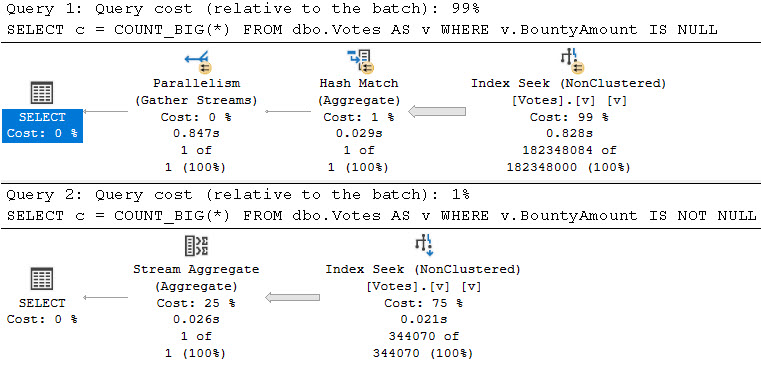

The first one that checks for NULL values returns a count of 182,348,084.

The second one that checks for NOT NULL values returns a count of 344,070.

Keep those in mind!

The query plans for them both look like this:

Which run, respectively (and respectably), in 846ms and 26ms. Obviously the query with the more selective predicate will have a time advantage, here.

Wrongly

Here’s where things start to go wrong.

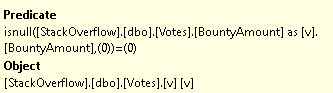

This query returns incorrect results, but you’re probably used to that because of all the NOLOCK hints in your queries anyway.

SELECT

c = COUNT_BIG(*)

FROM dbo.Votes AS v

WHERE ISNULL(v.BountyAmount, '') = '';

A count of 182,349,088 is returned rather than 182,348,084, because there are 1004 rows with a bounty of 0.

Even though we have an empty string in our query, it’s implicitly converted to 0.

And you thought you were so clever.

Badly

The exercises in futility that I see people carrying on with often look make use of ISNULL, COALESCE, and CASE expressions.

It’s worth noting here that COALESCE is only a CASE expression underneath, anyway. They are interchangeable in this respect.

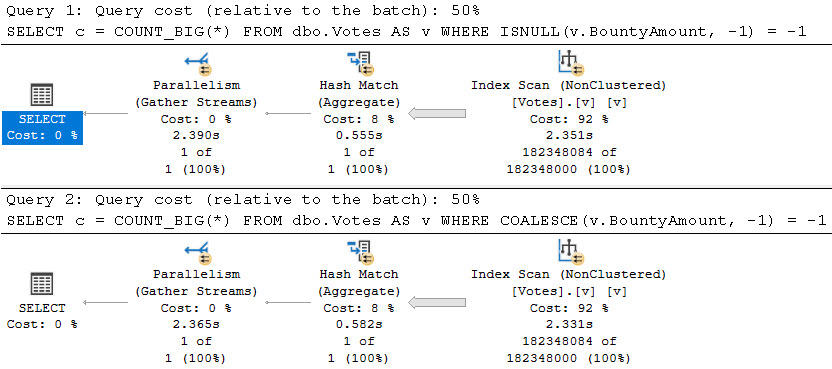

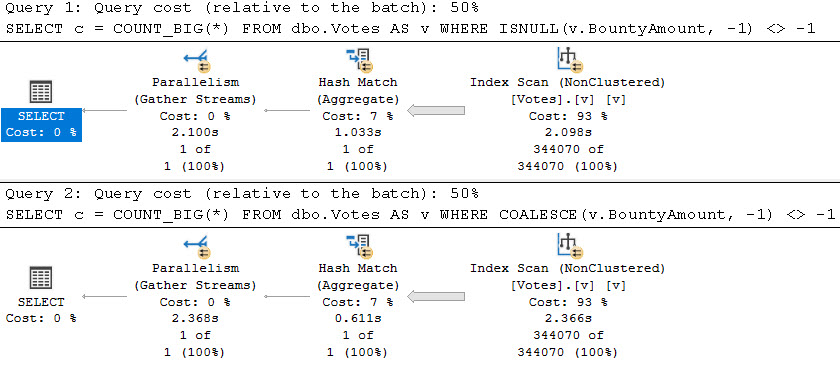

For findings NULLs, people will screw up and do this:

SELECT

c = COUNT_BIG(*)

FROM dbo.Votes AS v

WHERE ISNULL(v.BountyAmount, -1) = -1;

SELECT

c = COUNT_BIG(*)

FROM dbo.Votes AS v

WHERE COALESCE(v.BountyAmount, -1) = -1;

We can use -1 here because it doesn’t naturally occur in the data. Results are correct for both, but performance is comparatively horrible.

We’re looking at 2.5 seconds compared to 900ms. This situation gets worse with the more selective predicates, too.

These both take roughly the same time as the other unnatural forms of this query, but recall the natural version of this query finished in under 30ms.

Deadly

I hope I don’t have to write about this anymore, but at the rate I see people doing this stuff, I kind of doubt it.

Broken Record Enterprises, it feels like sometimes.

I’m not sure why anyone thinks this is a good idea. I’ve heard rumors that it comes from application developers who are used to NULLs throwing errors writing SQL queries, where they don’t pose the same threat.

Who knows, though. Maybe people just really like the festive pink text color that functions turn in SSMS.

Thanks for reading!

Going Further

If this is the kind of SQL Server stuff you love learning about, you’ll love my training. I’m offering a 75% discount to my blog readers if you click from here. I’m also available for consulting if you just don’t have time for that and need to solve performance problems quickly.

Related Posts

- Inline Table Valued Functions And Catch All Queries In SQL Server

- Why You’re Testing SQL Server Code For Performance The Wrong Way

- SQL Server 2022 CTP 2.1 Improvements To Parameter Sensitive Plan Optimization

- SQL Server 2022: Introduces the DATETRUNC Function, So You Can Truncate Your Dates And Stuff

What about the “optional parameter” isNull pattern? In limited testing, these two conditions receive similar index scan plans, perform exactly the same in terms of logical reads, and use nearly the same CPU (note that parameter “@param” in the example below is exactly the same data type as table column “colname”, so there is no implicit conversion, the table has approximately 1,000,000 rows, and there is an index on colname that INCLUDEs all of the selected columns):

WHERE (@param IS NULL OR colname = @param)

… versus …

WHERE colname = isNull(@param,colname)

If there is no implicit conversion, are there situations where isNull performs worse than (IS NULL OR =)?

Yes, they’re both equally trash.

You should be using dynamic SQL (or a recompile hint) for that pattern instead.

It has been enlightening!

The same considerations are also valid for Postgres?

Postgres doesn’t have ISNULL 😉

I like to make sure a column returned from a select doesn’t have a NULL value if it is a nullable column when the query is in an application. Much easier to handle this with a ISNULL(columnname,”) AS NoErrorBombsHere than to have to add error handling code throughout my application to handle NULL values.

Yeah, it’s a lot different in the select list than in the where clause (unless you have nested selects and filter on the expression).

This is a great article about null. Can I ask you I you have some way to tweek this pattern:

column1 = @variable1 or column1 is null

Regards,

Yeah, you definitely shouldn’t do that.

The link you sent it’s related to some logic based on the values of the parameters. This is another thing (as far as I can see :)). And in the end that is the thing. We offer a PaaS where customers got some flexibility. In this case, it’s related to boolean values 🙁 and when is not set it’s also null. Some kind of backward compatibility for older records.

I’m gonna say it one last time: you definitely shouldn’t do that.