Well, Stop

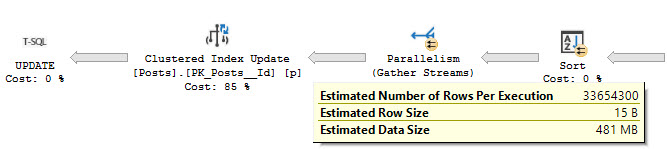

How many times have you seen something like this?

You can replace “Update” with “Insert” or “Delete”, but the story is the same.

Large modifications are typically not fast modifications, for a number of reasons. To understand why, we need to think about the process as a whole.

Modi-Fi

The slowness of large modifications can be traced back to a number of root causes:

- The Update and Delete operators themselves run single-threaded, no matter what

- Inserts can go parallel, but the rules are often prohibitive

- Halloween Protection Spools can be really slow

- You probably have a bunch of nonclustered indexes that need to be read so that…

- All those indexes can be modified, and written to the transaction log as well

- If your database is synchronously replicated anywhere, you have to wait on that ack

- There are no Batch Mode modifications

When a modification needs to hit a significant number of rows, I usually want to think about batching it. That might not always be possible, but it’s certainly a kinder way to do things.
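Here’s a minimal sketch of what batching a large delete can look like. It assumes a hypothetical `events` table with a `created` column, and it uses Python’s built-in `sqlite3` module as a stand-in so it runs anywhere. In SQL Server, the same loop is usually written in T-SQL around `DELETE TOP (n)`. The point is the shape: each batch is its own short transaction, instead of one giant one.

```python
import sqlite3


def delete_in_batches(conn: sqlite3.Connection, batch_size: int, cutoff: int) -> int:
    """Delete rows from the hypothetical `events` table where created < cutoff,
    at most batch_size rows per transaction. Returns the total rows deleted."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    total = 0
    while True:
        # SQLite stand-in for SQL Server's DELETE TOP (n): pick one batch of keys.
        cur = conn.execute(
            "DELETE FROM events WHERE id IN ("
            " SELECT id FROM events WHERE created < ? LIMIT ?)",
            (cutoff, batch_size),
        )
        conn.commit()  # commit each batch so no single transaction grows huge
        deleted = cur.rowcount
        total += deleted
        if deleted < batch_size:  # a short batch means nothing qualifying is left
            break
    return total


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE events (id INTEGER PRIMARY KEY, created INTEGER NOT NULL)")
    conn.executemany("INSERT INTO events (created) VALUES (?)", [(i,) for i in range(10_000)])
    conn.commit()
    removed = delete_in_batches(conn, batch_size=1000, cutoff=2500)
    remaining = conn.execute("SELECT COUNT(*) FROM events").fetchone()[0]
    print(removed, remaining)  # prints: 2500 7500
```

Stopping as soon as a batch comes back short saves one last empty round trip. The batch size of 1,000 is arbitrary; it’s the knob you’d tune against your own locking and logging behavior.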

If you can’t batch inserts and deletes, you may want to think about using partitioning to quickly switch data in and out.

And of course, don’t use MERGE.

Thanks for reading!


Is the MERGE statement going to put a stick between the wheels? (as we literally say in Italy)

Holy ship!

Yes. Quite a stick.

Please, don’t spread myths about MERGE.

Please don’t be naive about MERGE. I frequently see people running into both performance and data integrity issues when using it.