Strings Were A Mistake

While working with a client recently, we found that someone, at some point in time, probably during the original migration from Access, had chosen CHAR columns rather than VARCHAR columns.

Okay, fine. How bad could it be?

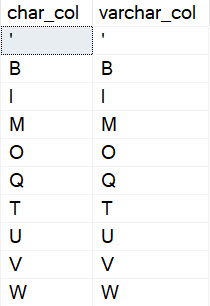

Bad enough that… A whole bunch of columns that only had a single character in them were stored in CHAR(1000) columns.

Which was wildly inflating memory grants, and causing all sorts of nasty issues.

Table create script it at the end of the post!

Del Granto

To show how you much different grants are for char vs varchar, I need to give you a little background on sort memory grant estimates.

When the optimizer estimates how much memory it’ll need, the calculation is based on the number and width of the rows that will have to get sorted.

For variable length string columns, it estimates that half the number of bytes will be filled. So if you have a varchar(100) column it’ll estimate 50 bytes will be filled for every row, and for an nvarchar(100) column it’ll estimate that 100 bytes will be filled for every row, because unicode characters are stored as double-byte to account for accent marks, etc.

So, yes, identically sized varchar and nvarchar columns will have different memory grant requirements.

And yes, identically sized (n)char and (n)varchar columns will also have different memory grant requirements.

Granto Loco

Let’s take these two queries, and let memory grant feedback right-size the grants for these two queries:

DECLARE

@c char(1000);

SELECT

@c = m.char_col

FROM dbo.murmury AS m

ORDER BY m.some_date DESC;

DECLARE

@v varchar(1000);

SELECT

@v = m.varchar_col

FROM dbo.murmury AS m

ORDER BY m.some_date DESC;

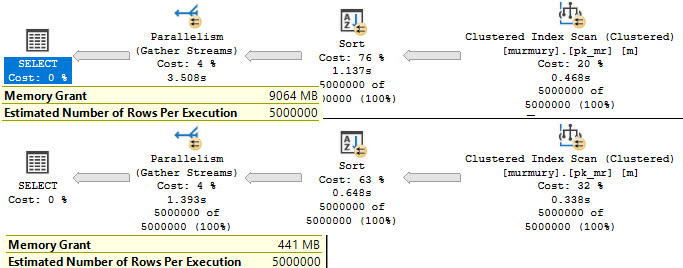

Here are the fully adjusted and stabilized grants:

Around 9GB vs 441MB. All because those CHAR columns are padded out with 999 empty characters.

So hey, if you need a reason to review schema for char column sanity, this just might be it.

Thanks for reading!

Going Further

If this is the kind of SQL Server stuff you love learning about, you’ll love my training. I’m offering a 75% discount to my blog readers if you click from here. I’m also available for consulting if you just don’t have time for that and need to solve performance problems quickly.

Scripto

DROP TABLE IF EXISTS dbo.murmury;

SELECT

ISNULL

(

x.n,

0

) AS id,

DATEADD

(

MINUTE,

x.n,

'20210101'

) AS some_date,

CONVERT

(

char(1000),

LEFT(x.text, 1)

) AS char_col,

CONVERT

(

varchar(1000),

LEFT(x.text, 1)

) AS varchar_col

INTO dbo.murmury

FROM

(

SELECT TOP (1000 * 5000)

ROW_NUMBER() OVER

(

ORDER BY

1/0

) AS n,

m.text

FROM sys.messages AS m

CROSS JOIN sys.messages AS m2

) AS x;

ALTER TABLE dbo.murmury

ADD CONSTRAINT pk_mr PRIMARY KEY CLUSTERED(id);

Along with the annoyance of having to seemingly always RTRIM() char data types when working with their contents. 🙁

It’s all terrible.

“It Depends”. You don’t have to do RTRIM with JOINs.

To clarify though — the memory grant *estimate* for CHAR should be twice that of the same-sized VARCHAR, correct? Or is it not that simple?

I would imagine it’s more complicated than that, but I don’t have any further details.

Si is probably correct. IIRC/Rumor has it, memory grants for VARCHAR() and other variable length datatypes are estimated to be half the length of the assigned width. Perhaps it’s also why some folks run into issues if they do things like assigning (for example) a VARCHAR(50) to a column that will almost always have (again, for example) at least 40 characters in the column. Of course, people would be loath to use a datatype/length of CHAR(50) for such a thing because of the 10 character “waste” (although that does prevent explosive page splits and the resulting fragmentation during “ExpAnsive” updates).

Heh… Now THERE’s an interesting interview question… (TAKE THAT GOOGLE!!!) 😀

“What is the possible reason for someone creating a VARCHAR(80) column and then constraining its maximum width to 50”?

BWAAAA-HAAAA_HAAA!!! Every time I see a migration gone bad like that, I have to look at the bright side… at least you won’t be able to blame fragmentation on “ExpAnsive” updates! 😀 😀 😀

Thanks for the post, Erik.

Fragmentation will get blamed as long as people have a way to defragment things, I think.